Publications

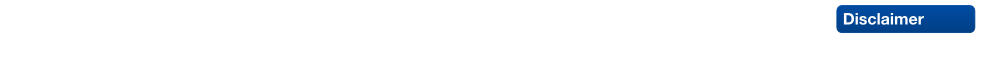

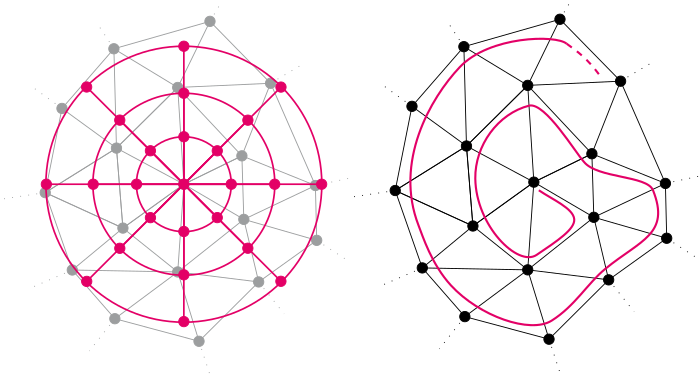

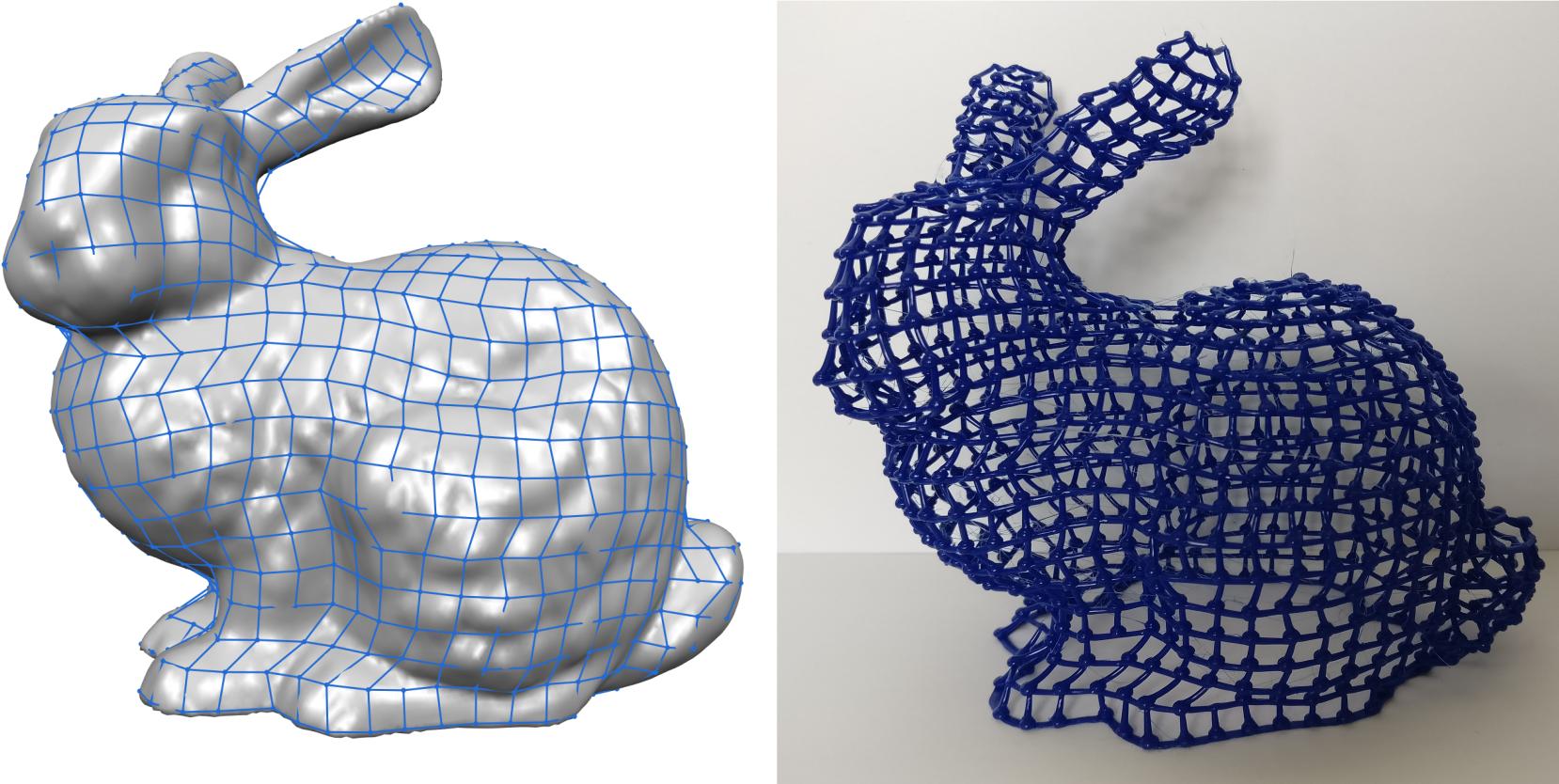

Singularity-Constrained Octahedral Fields for Hexahedral Meshing

Despite high practical demand, algorithmic hexahedral meshing with guarantees on robustness and quality remains unsolved. A promising direction follows the idea of integer-grid maps, which pull back the Cartesian hexahedral grid formed by integer isoplanes from a parametric domain to a surface-conforming hexahedral mesh of the input object. Since directly optimizing for a high-quality integer-grid map is mathematically challenging, the construction is usually split into two steps: (1) generation of a surface-aligned octahedral field and (2) generation of an integer-grid map that best aligns to the octahedral field. The main robustness issue stems from the fact that smooth octahedral fields frequently exhibit singularity graphs that are not appropriate for hexahedral meshing and induce heavily degenerate integer-grid maps. The first contribution of this work is an enumeration of all local configurations that exist in hex meshes with bounded edge valence, and a generalization of the Hopf-Poincaré formula to octahedral fields, leading to necessary local and global conditions for the hex-meshability of an octahedral field in terms of its singularity graph. The second contribution is a novel algorithm to generate octahedral fields with prescribed hex-meshable singularity graphs, which requires the solution of a large non-linear mixed-integer algebraic system. This algorithm is an important step toward robust automatic hexahedral meshing since it enables the generation of a hex-meshable octahedral field.
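
The singularity graph discussed above has a simple discrete counterpart in a hexahedral mesh: an interior edge is regular when exactly four hexahedra meet at it, and singular otherwise. The following is a minimal sketch of counting edge valences, not the paper's algorithm. The vertex ordering, the helper `grid_hexes`, and all function names are assumptions made for illustration.

```python
from collections import Counter
from itertools import product

# Local edges of one hexahedron, assuming vertices 0-3 form the bottom face
# and vertex i+4 lies directly above vertex i.
HEX_EDGES = [(0, 1), (1, 2), (2, 3), (3, 0),
             (4, 5), (5, 6), (6, 7), (7, 4),
             (0, 4), (1, 5), (2, 6), (3, 7)]


def edge_valences(hexes):
    """Count, for every mesh edge, how many hexahedra are incident to it."""
    valence = Counter()
    for hex_vertices in hexes:
        for a, b in HEX_EDGES:
            edge = frozenset((hex_vertices[a], hex_vertices[b]))
            valence[edge] += 1
    return valence


def grid_hexes(nx, ny, nz):
    """Build a regular nx x ny x nz block of hexahedra (hypothetical test data)."""
    def vid(i, j, k):
        return (k * (ny + 1) + j) * (nx + 1) + i

    hexes = []
    for i, j, k in product(range(nx), range(ny), range(nz)):
        hexes.append((vid(i, j, k), vid(i + 1, j, k),
                      vid(i + 1, j + 1, k), vid(i, j + 1, k),
                      vid(i, j, k + 1), vid(i + 1, j, k + 1),
                      vid(i + 1, j + 1, k + 1), vid(i, j + 1, k + 1)))
    return hexes, vid


hexes, vid = grid_hexes(2, 2, 1)
valences = edge_valences(hexes)
# The vertical edge in the middle is the only interior edge of this block.
central_edge = frozenset((vid(1, 1, 0), vid(1, 1, 1)))
print(valences[central_edge])  # prints 4, i.e. a regular edge
```

Boundary edges need a separate rule, since fewer hexahedra meet there even in a perfectly regular grid.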

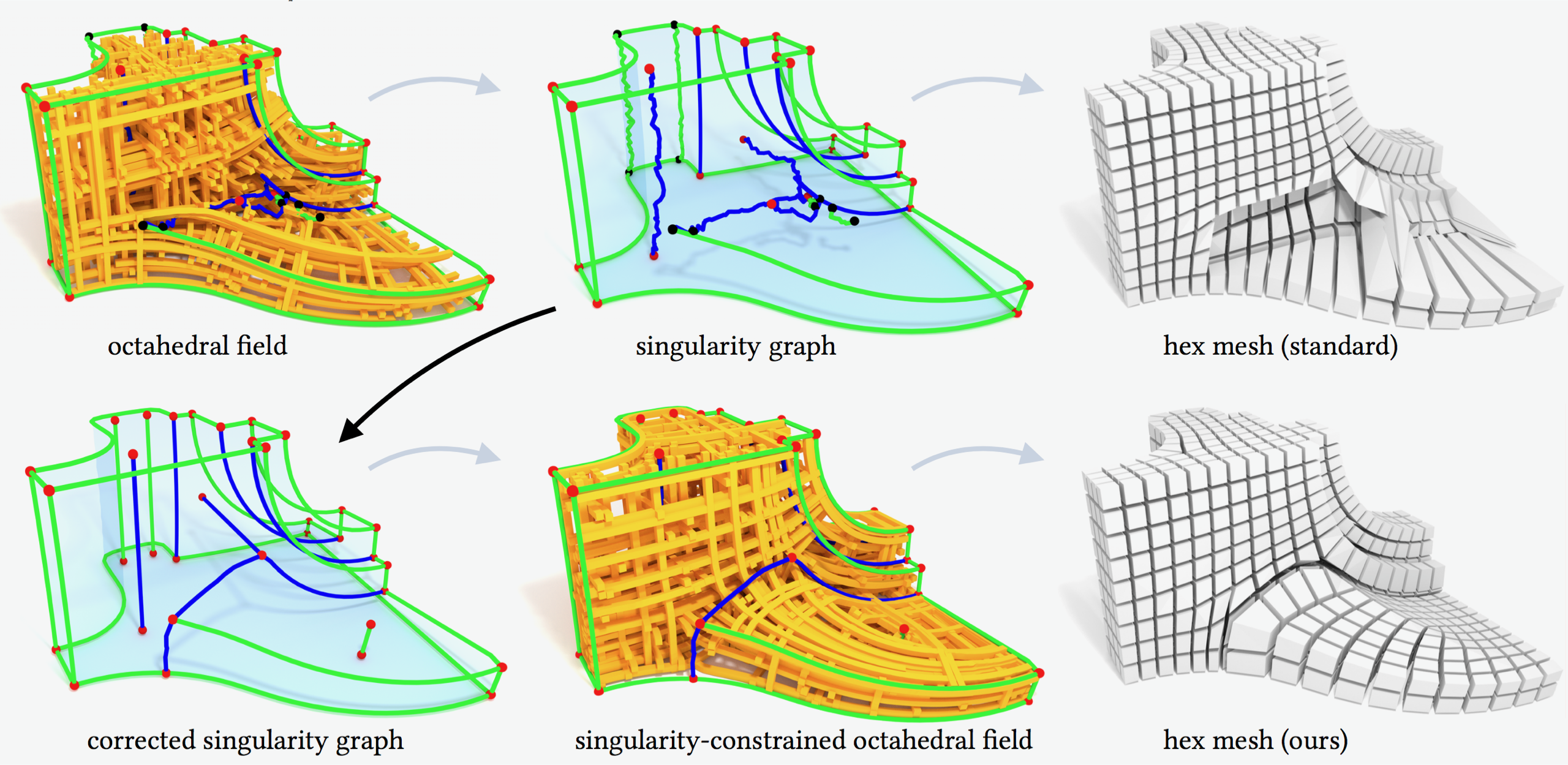

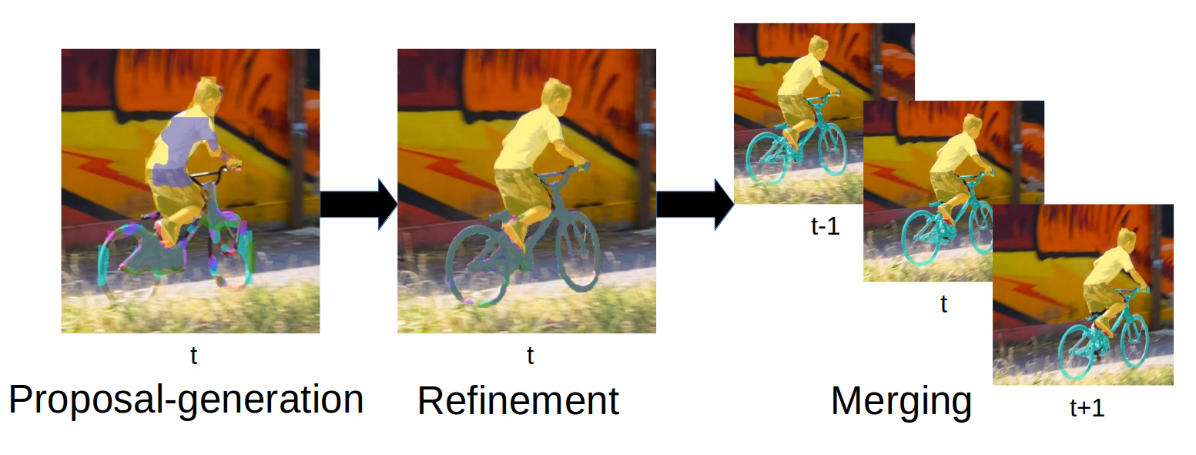

PReMVOS: Proposal-generation, Refinement and Merging for Video Object Segmentation

We address semi-supervised video object segmentation, the task of automatically generating accurate and consistent pixel masks for objects in a video sequence, given the first-frame ground truth annotations. Towards this goal, we present the PReMVOS algorithm (Proposal-generation, Refinement and Merging for Video Object Segmentation). Our method separates this problem into two steps: first generating a set of accurate object segmentation mask proposals for each video frame, and then selecting and merging these proposals into accurate and temporally consistent pixel-wise object tracks over a video sequence, in a way designed specifically to tackle the challenges of segmenting multiple objects across a video sequence. Our approach surpasses all previous state-of-the-art results on the DAVIS 2017 video object segmentation benchmark with a J & F mean score of 71.6 on the test-dev dataset, and achieves first place in both the DAVIS 2018 Video Object Segmentation Challenge and the YouTube-VOS 1st Large-scale Video Object Segmentation Challenge.
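
In the DAVIS benchmark, J denotes region similarity (the Jaccard index, or intersection over union, between a predicted and a ground-truth mask) and F denotes contour accuracy; the reported score averages the two. The sketch below covers only J, on masks stored as nested lists. The function name and the example masks are illustrative assumptions, and the official evaluation code is not reproduced here.

```python
def jaccard(pred, gt):
    """Region similarity J: intersection over union of two binary masks."""
    intersection = 0
    union = 0
    for pred_row, gt_row in zip(pred, gt):
        for p, g in zip(pred_row, gt_row):
            intersection += 1 if (p and g) else 0
            union += 1 if (p or g) else 0
    # Two empty masks agree perfectly; treating that case as J = 1 is a
    # convention assumed here.
    return 1.0 if union == 0 else intersection / union


pred = [[0, 1, 1],
        [0, 1, 1],
        [0, 0, 0]]
gt = [[0, 1, 1],
      [0, 1, 0],
      [0, 1, 0]]
print(jaccard(pred, gt))  # 3 shared pixels out of 5 covered pixels
```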

@inproceedings{luiten2018premvos,

title={PReMVOS: Proposal-generation, Refinement and Merging for Video Object Segmentation},

author={Jonathon Luiten and Paul Voigtlaender and Bastian Leibe},

booktitle={Asian Conference on Computer Vision},

year={2018}

}

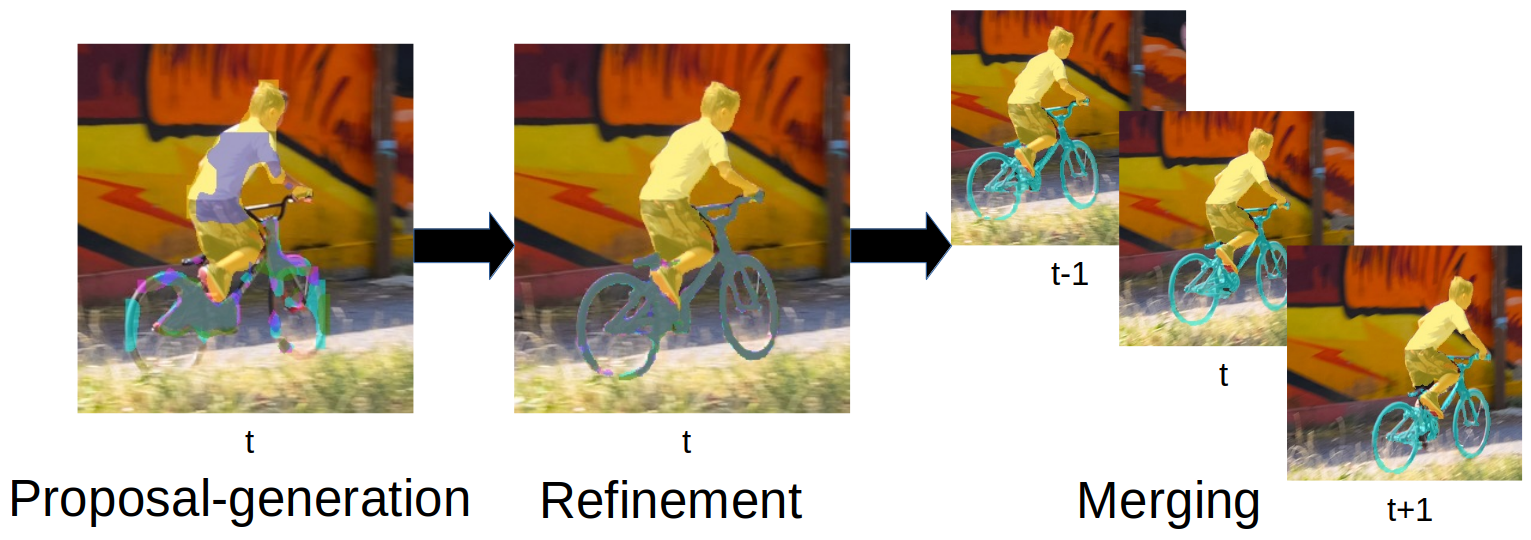

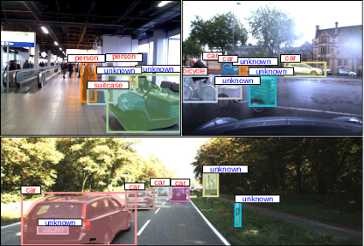

Track, then Decide: Category-Agnostic Vision-based Multi-Object Tracking

The most common paradigm for vision-based multi-object tracking is tracking-by-detection, due to the availability of reliable detectors for several important object categories such as cars and pedestrians. However, future mobile systems will need a capability to cope with rich human-made environments, in which obtaining detectors for every possible object category would be infeasible. In this paper, we propose a model-free multi-object tracking approach that uses a category-agnostic image segmentation method to track objects. We present an efficient segmentation mask-based tracker which associates pixel-precise masks reported by the segmentation. Our approach can utilize semantic information whenever it is available for classifying objects at the track level, while retaining the capability to track generic unknown objects in the absence of such information. We demonstrate experimentally that our approach achieves performance comparable to state-of-the-art tracking-by-detection methods for popular object categories such as cars and pedestrians. Additionally, we show that the proposed method can discover and robustly track a large variety of other objects.
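
One simple way to associate pixel-precise masks across frames is greedy matching by mask overlap. The sketch below illustrates that general idea only; the abstract does not state the association rule the authors use. The function names and the `min_iou` threshold are hypothetical.

```python
def mask_iou(a, b):
    """IoU of two masks given as sets of (row, col) pixel coordinates."""
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def associate(tracks, detections, min_iou=0.3):
    """Greedily match track masks to new masks by decreasing overlap.

    `tracks` and `detections` map ids to pixel sets. `min_iou` is a
    hypothetical threshold, not a value taken from the paper.
    """
    pairs = sorted(
        ((mask_iou(t_mask, d_mask), t_id, d_id)
         for t_id, t_mask in tracks.items()
         for d_id, d_mask in detections.items()),
        key=lambda item: item[0], reverse=True)
    matches, used_tracks, used_dets = {}, set(), set()
    for iou, t_id, d_id in pairs:
        if iou < min_iou:
            break  # all remaining pairs overlap even less
        if t_id in used_tracks or d_id in used_dets:
            continue
        matches[t_id] = d_id
        used_tracks.add(t_id)
        used_dets.add(d_id)
    # Masks without a matching track could start new tracks.
    unmatched = [d_id for d_id in detections if d_id not in used_dets]
    return matches, unmatched
```

Because the matching uses only mask geometry, it needs no category label, which is the property a category-agnostic tracker relies on.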

@article{Osep18ICRA,

author = {O\v{s}ep, Aljo\v{s}a and Mehner, Wolfgang and Voigtlaender, Paul and Leibe, Bastian},

title = {Track, then Decide: Category-Agnostic Vision-based Multi-Object Tracking},

journal = {ICRA},

year = {2018}

}

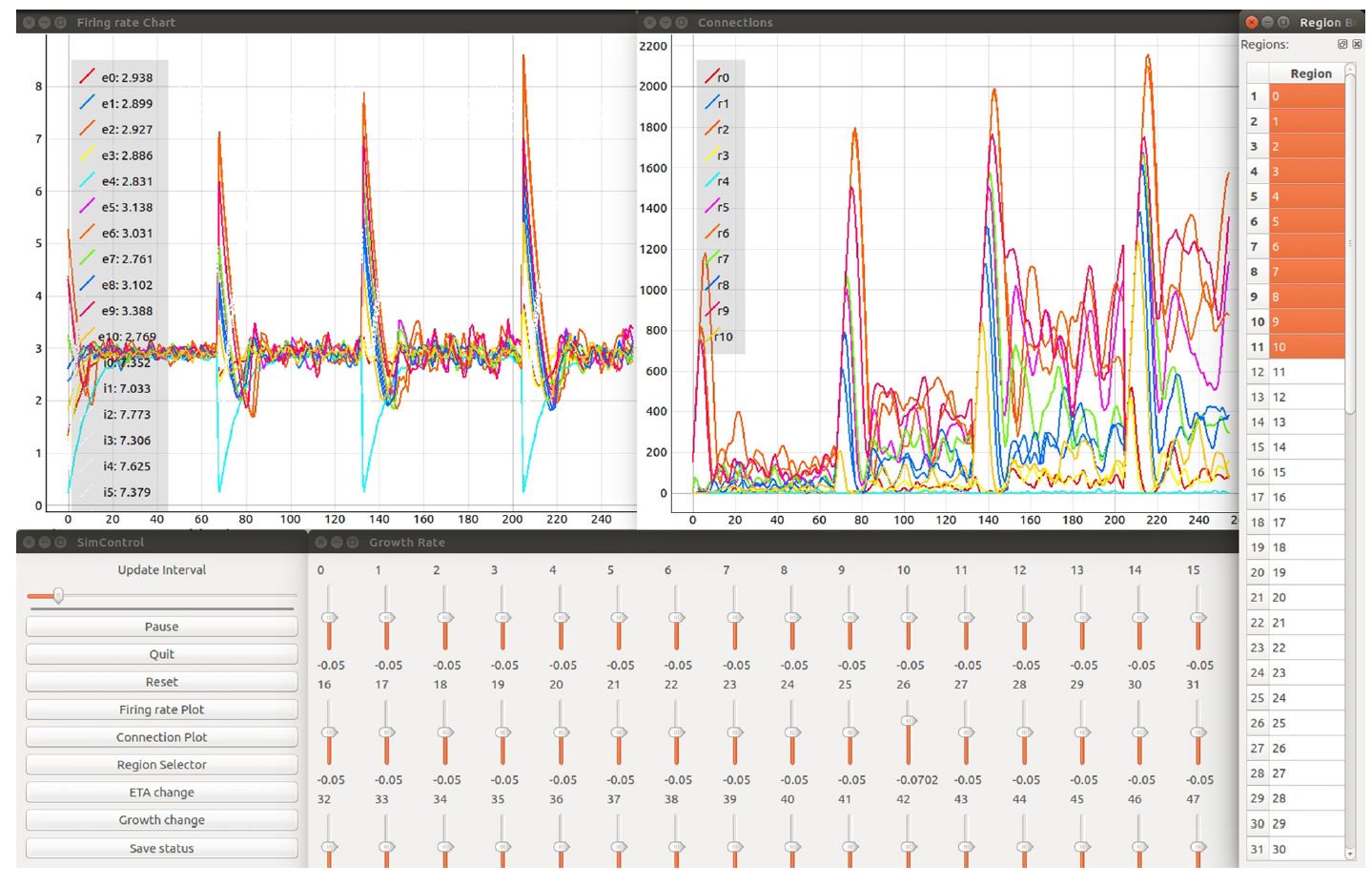

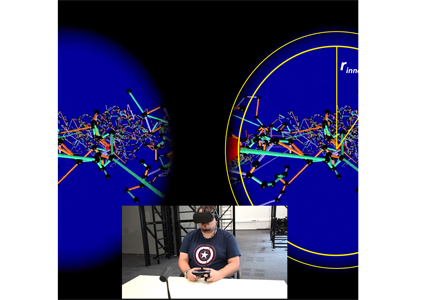

Toward Rigorous Parameterization of Underconstrained Neural Network Models Through Interactive Visualization and Steering of Connectivity Generation

Simulation models in many scientific fields can have non-unique solutions or unique solutions which can be difficult to find. Moreover, in evolving systems, unique final state solutions can be reached by multiple different trajectories. Neuroscience is no exception. Often, neural network models are subject to parameter fitting to obtain desirable output comparable to experimental data. Parameter fitting without sufficient constraints and a systematic exploration of the possible solution space can lead to conclusions valid only around local minima or around non-minima. To address this issue, we have developed an interactive tool for visualizing and steering parameters in neural network simulation models. In this work, we focus particularly on connectivity generation, since finding suitable connectivity configurations for neural network models constitutes a complex parameter search scenario. The development of the tool has been guided by several use cases. The tool allows researchers to steer the parameters of the connectivity generation during the simulation, thus quickly growing networks composed of multiple populations with a targeted mean activity. The flexibility of the software allows scientists to explore other connectivity and neuron variables apart from the ones presented as use cases. With this tool, we enable an interactive exploration of parameter spaces and a better understanding of neural network models and grapple with the crucial problem of non-unique network solutions and trajectories. In addition, we observe a reduction in turnaround times for the assessment of these models, due to interactive visualization while the simulation is computed.

@ARTICLE{10.3389/fninf.2018.00032,

AUTHOR={Nowke, Christian and Diaz-Pier, Sandra and Weyers, Benjamin and Hentschel, Bernd and Morrison, Abigail and Kuhlen, Torsten W. and Peyser, Alexander},

TITLE={Toward Rigorous Parameterization of Underconstrained Neural Network Models Through Interactive Visualization and Steering of Connectivity Generation},

JOURNAL={Frontiers in Neuroinformatics},

VOLUME={12},

PAGES={32},

YEAR={2018},

URL={https://www.frontiersin.org/article/10.3389/fninf.2018.00032},

DOI={10.3389/fninf.2018.00032},

ISSN={1662-5196},

ABSTRACT={Simulation models in many scientific fields can have non-unique solutions or unique solutions which can be difficult to find.

Moreover, in evolving systems, unique final state solutions can be reached by multiple different trajectories.

Neuroscience is no exception. Often, neural network models are subject to parameter fitting to obtain desirable output comparable to experimental data. Parameter fitting without sufficient constraints and a systematic exploration of the possible solution space can lead to conclusions valid only around local minima or around non-minima. To address this issue, we have developed an interactive tool for visualizing and steering parameters in neural network simulation models.

In this work, we focus particularly on connectivity generation, since finding suitable connectivity configurations for neural network models constitutes a complex parameter search scenario. The development of the tool has been guided by several use cases -- the tool allows researchers to steer the parameters of the connectivity generation during the simulation, thus quickly growing networks composed of multiple populations with a targeted mean activity. The flexibility of the software allows scientists to explore other connectivity and neuron variables apart from the ones presented as use cases. With this tool, we enable an interactive exploration of parameter spaces and a better understanding of neural network models and grapple with the crucial problem of non-unique network solutions and trajectories. In addition, we observe a reduction in turn around times for the assessment of these models, due to interactive visualization while the simulation is computed.}

}

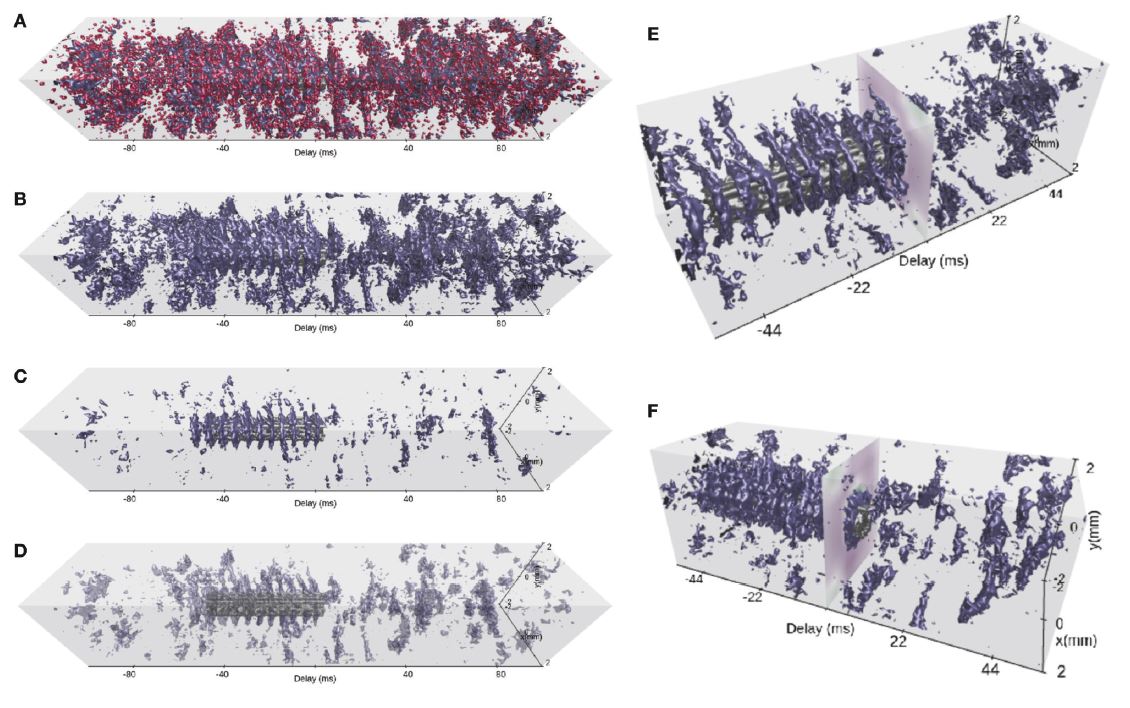

VIOLA: A Multi-Purpose and Web-Based Visualization Tool for Neuronal-Network Simulation Output

Neuronal network models and corresponding computer simulations are invaluable tools to aid the interpretation of the relationship between neuron properties, connectivity, and measured activity in cortical tissue. Spatiotemporal patterns of activity propagating across the cortical surface as observed experimentally can for example be described by neuronal network models with layered geometry and distance-dependent connectivity. In order to cover the surface area captured by today’s experimental techniques and to achieve sufficient self-consistency, such models contain millions of nerve cells. The interpretation of the resulting stream of multi-modal and multi-dimensional simulation data calls for integrating interactive visualization steps into existing simulation-analysis workflows. Here, we present a set of interactive visualization concepts called views for the visual analysis of activity data in topological network models, and a corresponding reference implementation VIOLA (VIsualization Of Layer Activity). The software is a lightweight, open-source, web-based, and platform-independent application combining and adapting modern interactive visualization paradigms, such as coordinated multiple views, for massively parallel neurophysiological data. For a use-case demonstration we consider spiking activity data of a two-population, layered point-neuron network model incorporating distance-dependent connectivity subject to a spatially confined excitation originating from an external population. With the multiple coordinated views, an explorative and qualitative assessment of the spatiotemporal features of neuronal activity can be performed upfront of a detailed quantitative data analysis of specific aspects of the data. Interactive multi-view analysis therefore assists existing data analysis workflows.
Furthermore, ongoing efforts including the European Human Brain Project aim at providing online user portals for integrated model development, simulation, analysis, and provenance tracking, wherein interactive visual analysis tools are one component. Browser-compatible, web-technology based solutions are therefore required. Within this scope, with VIOLA we provide a first prototype.

@ARTICLE{10.3389/fninf.2018.00075,

AUTHOR={Senk, Johanna and Carde, Corto and Hagen, Espen and Kuhlen, Torsten W. and Diesmann, Markus and Weyers, Benjamin},

TITLE={VIOLA—A Multi-Purpose and Web-Based Visualization Tool for Neuronal-Network Simulation Output},

JOURNAL={Frontiers in Neuroinformatics},

VOLUME={12},

PAGES={75},

YEAR={2018},

URL={https://www.frontiersin.org/article/10.3389/fninf.2018.00075},

DOI={10.3389/fninf.2018.00075},

ISSN={1662-5196},

ABSTRACT={Neuronal network models and corresponding computer simulations are invaluable tools to aid the interpretation of the relationship between neuron properties, connectivity and measured activity in cortical tissue. Spatiotemporal patterns of activity propagating across the cortical surface as observed experimentally can for example be described by neuronal network models with layered geometry and distance-dependent connectivity. In order to cover the surface area captured by today's experimental techniques and to achieve sufficient self-consistency, such models contain millions of nerve cells. The interpretation of the resulting stream of multi-modal and multi-dimensional simulation data calls for integrating interactive visualization steps into existing simulation-analysis workflows. Here, we present a set of interactive visualization concepts called views for the visual analysis of activity data in topological network models, and a corresponding reference implementation VIOLA (VIsualization Of Layer Activity). The software is a lightweight, open-source, web-based and platform-independent application combining and adapting modern interactive visualization paradigms, such as coordinated multiple views, for massively parallel neurophysiological data. For a use-case demonstration we consider spiking activity data of a two-population, layered point-neuron network model incorporating distance-dependent connectivity subject to a spatially confined excitation originating from an external population. With the multiple coordinated views, an explorative and qualitative assessment of the spatiotemporal features of neuronal activity can be performed upfront of a detailed quantitative data analysis of specific aspects of the data. Interactive multi-view analysis therefore assists existing data analysis workflows. 
Furthermore, ongoing efforts including the European Human Brain Project aim at providing online user portals for integrated model development, simulation, analysis and provenance tracking, wherein interactive visual analysis tools are one component. Browser-compatible, web-technology based solutions are therefore required. Within this scope, with VIOLA we provide a first prototype.}

}

Immersive Analytics Applications in Life and Health Sciences

Life and health sciences are key application areas for immersive analytics. This spans a broad range including medicine (e.g., investigations in tumour boards), pharmacology (e.g., research of adverse drug reactions), biology (e.g., immersive virtual cells) and ecology (e.g., analytics of animal behaviour). We present a brief overview of general applications of immersive analytics in the life and health sciences, and present a number of applications in detail, such as immersive analytics in structural biology, in medical image analytics, in neurosciences, in epidemiology, in biological network analysis and for virtual cells.

@Inbook{Czauderna2018,

author="Czauderna, Tobias

and Haga, Jason

and Kim, Jinman

and Klapperst{\"u}ck, Matthias

and Klein, Karsten

and Kuhlen, Torsten

and Oeltze-Jafra, Steffen

and Sommer, Bj{\"o}rn

and Schreiber, Falk",

editor="Marriott, Kim

and Schreiber, Falk

and Dwyer, Tim

and Klein, Karsten

and Riche, Nathalie Henry

and Itoh, Takayuki

and Stuerzlinger, Wolfgang

and Thomas, Bruce H.",

title="Immersive Analytics Applications in Life and Health Sciences",

bookTitle="Immersive Analytics",

year="2018",

publisher="Springer International Publishing",

address="Cham",

pages="289--330",

abstract="Life and health sciences are key application areas for immersive analytics. This spans a broad range including medicine (e.g., investigations in tumour boards), pharmacology (e.g., research of adverse drug reactions), biology (e.g., immersive virtual cells) and ecology (e.g., analytics of animal behaviour). We present a brief overview of general applications of immersive analytics in the life and health sciences, and present a number of applications in detail, such as immersive analytics in structural biology, in medical image analytics, in neurosciences, in epidemiology, in biological network analysis and for virtual cells.",

isbn="978-3-030-01388-2",

doi="10.1007/978-3-030-01388-2_10",

url="https://doi.org/10.1007/978-3-030-01388-2_10"

}

Exploring Immersive Analytics for Built Environments

This chapter overviews the application of immersive analytics to simulations of built environments through three distinct case studies. The first case study examines an immersive analytics approach based upon the concept of “Virtual Production Intelligence” for virtual prototyping tools throughout the planning phase of complete production sites. The second study addresses the 3D simulation of an extensive urban area and the attendant immersive analytic considerations in an interactive model of a sustainable city. The third study reviews how immersive analytic overlays have been applied for virtual heritage in the reconstruction and crowd simulation of the medieval Cambodian temple complex of Angkor Wat.

@Inbook{Chandler2018,

author="Chandler, Tom

and Morgan, Thomas

and Kuhlen, Torsten Wolfgang",

editor="Marriott, Kim

and Schreiber, Falk

and Dwyer, Tim

and Klein, Karsten

and Riche, Nathalie Henry

and Itoh, Takayuki

and Stuerzlinger, Wolfgang

and Thomas, Bruce H.",

title="Exploring Immersive Analytics for Built Environments",

bookTitle="Immersive Analytics",

year="2018",

publisher="Springer International Publishing",

address="Cham",

pages="331--357",

abstract="This chapter overviews the application of immersive analytics to simulations of built environments through three distinct case studies. The first case study examines an immersive analytics approach based upon the concept of ``Virtual Production Intelligence'' for virtual prototyping tools throughout the planning phase of complete production sites. The second study addresses the 3D simulation of an extensive urban area (191 km$^2$) and the attendant immersive analytic considerations in an interactive model of a sustainable city. The third study reviews how immersive analytic overlays have been applied for virtual heritage in the reconstruction and crowd simulation of the medieval Cambodian temple complex of Angkor Wat.",

isbn="978-3-030-01388-2",

doi="10.1007/978-3-030-01388-2_11",

url="https://doi.org/10.1007/978-3-030-01388-2_11"

}

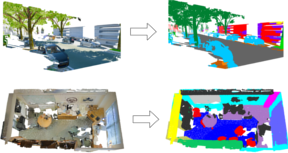

Know What Your Neighbors Do: 3D Semantic Segmentation of Point Clouds

In this paper, we present a deep learning architecture which addresses the problem of 3D semantic segmentation of unstructured point clouds. Compared to previous work, we introduce grouping techniques which define point neighborhoods in the initial world space and the learned feature space. Neighborhoods are important as they allow us to compute local or global point features depending on the spatial extent of the neighborhood. Additionally, we incorporate dedicated loss functions to further structure the learned point feature space: the pairwise distance loss and the centroid loss. We show how to apply these mechanisms to the task of 3D semantic segmentation of point clouds and report state-of-the-art performance on indoor and outdoor datasets.
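
A point neighborhood of the kind described above can be formed, for example, by a k-nearest-neighbor query. The brute-force sketch below is an illustration under that assumption, not the architecture's actual grouping layer, and the function name is hypothetical. Because it only measures Euclidean distance between coordinate tuples, the same routine applies to world-space positions and to learned feature vectors.

```python
import math


def k_nearest_neighbors(points, k):
    """For each point, return the indices of its k nearest other points.

    Brute force, O(n^2) distance evaluations per query set; `points` is a
    list of equal-length coordinate tuples of any dimension.
    """
    neighborhoods = []
    for i, p in enumerate(points):
        by_distance = sorted(
            (j for j in range(len(points)) if j != i),
            key=lambda j: math.dist(p, points[j]))
        neighborhoods.append(by_distance[:k])
    return neighborhoods


points = [(0, 0, 0), (1, 0, 0), (0, 2, 0), (10, 10, 10)]
print(k_nearest_neighbors(points, 2))
```

A small `k` yields local neighborhoods, while a `k` close to the number of points approaches a global one, which mirrors the role of the neighborhood's spatial extent.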

@inproceedings{3dsemseg_ECCVW18,

author = {Francis Engelmann and

Theodora Kontogianni and

Jonas Schult and

Bastian Leibe},

title = {Know What Your Neighbors Do: 3D Semantic Segmentation of Point Clouds},

booktitle = {{IEEE} European Conference on Computer Vision, GMDL Workshop, {ECCV}},

year = {2018}

}

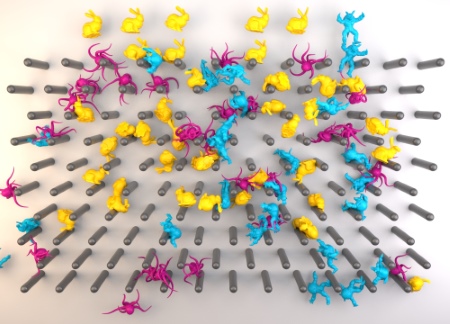

Fast Corotated FEM using Operator Splitting

In this paper we present a novel operator splitting approach for corotated FEM simulations. The deformation energy of the corotated linear material model consists of two additive terms. The first term models stretching in the individual spatial directions and the second term describes resistance to volume changes. By formulating the backward Euler time integration scheme as an optimization problem, we show that the first term is invariant to rotations. This allows us to use an operator splitting approach and to solve both terms individually with different numerical methods. The stretching part is solved accurately with an optimization integrator, which can be done very efficiently because the system matrix is constant over time such that its Cholesky factorization can be precomputed. The volume term is solved approximately by using the compliant constraints method and Gauss-Seidel iterations. Further, we introduce the analytic polar decomposition which allows us to speed up the extraction of the rotational part of the deformation gradient and to recover inverted elements. Finally, this results in an extremely fast and robust simulation method with high visual quality that outperforms standard corotated FEMs by more than two orders of magnitude and even the fast but inaccurate PBD and shape matching methods by more than one order of magnitude without having their typical drawbacks. This enables a very efficient simulation of complex scenes containing more than a million elements.
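
Corotated models need the rotational part R of the deformation gradient F, where F = R S is the polar decomposition. The paper's analytic polar decomposition targets 3D deformation gradients and is not reproduced here. As a stand-in, the sketch below uses the well-known closed form for the 2D case, which picks the rotation maximizing trace(RᵀF). The function name is an assumption.

```python
import math


def closest_rotation_2d(F):
    """Return the rotation R closest to the 2x2 matrix F (Frobenius norm).

    For det(F) > 0 this is the rotational factor of the polar decomposition
    F = R S. The result is always a proper rotation, even if F is inverted.
    """
    a = F[0][0] + F[1][1]
    b = F[1][0] - F[0][1]
    norm = math.hypot(a, b)
    if norm == 0.0:
        # Degenerate case: every rotation is equally close; use the identity.
        return [[1.0, 0.0], [0.0, 1.0]]
    c, s = a / norm, b / norm
    return [[c, -s], [s, c]]


# Example: rotate by 0.5 rad after a symmetric stretch S, then recover R.
theta = 0.5
c, s = math.cos(theta), math.sin(theta)
S = [[2.0, 0.5], [0.5, 1.0]]
F = [[c * S[0][0] - s * S[1][0], c * S[0][1] - s * S[1][1]],
     [s * S[0][0] + c * S[1][0], s * S[0][1] + c * S[1][1]]]
print(closest_rotation_2d(F))
```

Returning a proper rotation for inverted input is what lets a corotated solver recover from inverted elements instead of locking into a reflected state.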

@article{KKB2018,

author = {Tassilo Kugelstadt and Dan Koschier and Jan Bender},

title = {Fast Corotated FEM using Operator Splitting},

year = {2018},

journal = {Computer Graphics Forum (SCA)},

volume = {37},

number = {8}

}

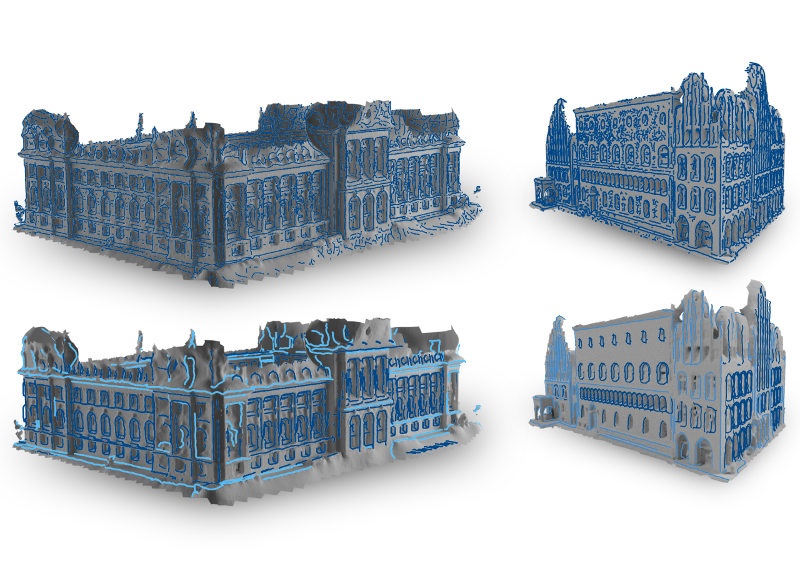

Feature Curve Co-Completion in Noisy Data

Feature curves on 3D shapes provide important hints about significant parts of the geometry and reveal their underlying structure. However, when we process real world data, automatically detected feature curves are affected by measurement uncertainty, missing data, and sampling resolution, leading to noisy, fragmented, and incomplete feature curve networks. These artifacts make further processing unreliable. In this paper we analyze the global co-occurrence information in noisy feature curve networks to fill in missing data and suppress weakly supported feature curves. For this we propose an unsupervised approach to find meaningful structure within the incomplete data by detecting multiple occurrences of feature curve configurations (co-occurrence analysis). We cluster and merge these into feature curve templates, which we leverage to identify strongly supported feature curve segments as well as to complete missing data in the feature curve network. In the presence of significant noise, previous approaches had to resort to user input, while our method performs fully automatic feature curve co-completion. Finding feature reoccurrences, however, is challenging since naive feature curve comparison fails in this setting due to fragmentation and partial overlaps of curve segments. To tackle this problem we propose a robust method for partial curve matching. This provides us with the means to apply symmetry detection methods to identify co-occurring configurations. Finally, Bayesian model selection enables us to detect and group re-occurrences that describe the data well and with low redundancy.

@inproceedings{gehre2018feature,

title={Feature Curve Co-Completion in Noisy Data},

author={Gehre, Anne and Lim, Isaak and Kobbelt, Leif},

booktitle={Computer Graphics Forum},

volume={37},

number={2},

year={2018},

organization={Wiley Online Library}

}

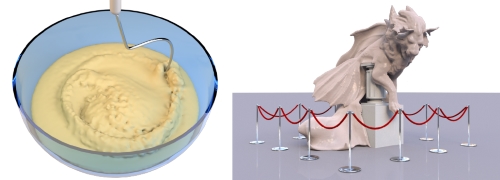

A Physically Consistent Implicit Viscosity Solver for SPH Fluids

In this paper, we present a novel physically consistent implicit solver for the simulation of highly viscous fluids using the Smoothed Particle Hydrodynamics (SPH) formalism. Our method is the result of a theoretical and practical in-depth analysis of the most recent implicit SPH solvers for viscous materials. Based on our findings, we developed a list of requirements that are vital to produce a realistic motion of a viscous fluid. These essential requirements include momentum conservation, a physically meaningful behavior under temporal and spatial refinement, the absence of ghost forces induced by spurious viscosities and the ability to reproduce complex physical effects that can be observed in nature. On the basis of several theoretical analyses, quantitative academic comparisons and complex visual experiments we show that none of the recent approaches is able to satisfy all requirements. In contrast, our proposed method meets all demands and therefore produces realistic animations in highly complex scenarios. We demonstrate that our solver outperforms former approaches in terms of physical accuracy and memory consumption while it is comparable in terms of computational performance. In addition to the implicit viscosity solver, we present a method to simulate melting objects. To this end, we generalize the viscosity model to a spatially varying viscosity field and provide an SPH discretization of the heat equation.

@article{WKBB2018,

author = {Marcel Weiler and Dan Koschier and Magnus Brand and Jan Bender},

title = {A Physically Consistent Implicit Viscosity Solver for SPH Fluids},

year = {2018},

journal = {Computer Graphics Forum (Eurographics)},

volume = {37},

number = {2}

}

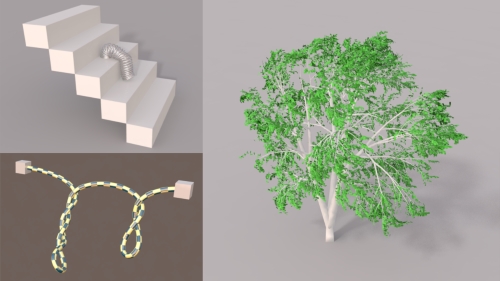

Direct Position-Based Solver for Stiff Rods

In this paper, we present a novel direct solver for the efficient simulation of stiff, inextensible elastic rods within the Position-Based Dynamics (PBD) framework. It is based on the XPBD algorithm, which extends PBD to simulate elastic objects with physically meaningful material parameters. XPBD approximates an implicit Euler integration and solves the system of non-linear equations using a non-linear Gauss-Seidel solver. However, this solver requires many iterations to converge for complex models and if convergence is not reached, the material becomes too soft. In contrast we use Newton iterations in combination with our direct solver to solve the non-linear equations which significantly improves convergence by solving all constraints of an acyclic structure (tree), simultaneously. Our solver only requires a few Newton iterations to achieve high stiffness and inextensibility. We model inextensible rods and trees using rigid segments connected by constraints. Bending and twisting constraints are derived from the well-established Cosserat model. The high performance of our solver is demonstrated in highly realistic simulations of rods consisting of multiple ten-thousand segments. In summary, our method allows the efficient simulation of stiff rods in the Position-Based Dynamics framework with a speedup of two orders of magnitude compared to the original XPBD approach.
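
For context, the Gauss-Seidel baseline mentioned above processes one constraint at a time. The sketch below shows the standard XPBD update for a single distance constraint between two particles. It illustrates the baseline only, not the paper's direct solver, and the function and parameter names are assumptions.

```python
import math


def xpbd_distance_step(p1, p2, w1, w2, rest_length, compliance, dt, lam):
    """One XPBD update of a distance constraint C = |p1 - p2| - rest_length.

    w1 and w2 are inverse masses and `lam` is the accumulated Lagrange
    multiplier. Returns the corrected positions and the updated multiplier.
    """
    diff = [a - b for a, b in zip(p1, p2)]
    length = math.sqrt(sum(d * d for d in diff))
    if length == 0.0 or w1 + w2 == 0.0:
        return p1, p2, lam  # gradient undefined, or both particles fixed
    n = [d / length for d in diff]        # gradient of C with respect to p1
    c = length - rest_length
    alpha_tilde = compliance / (dt * dt)  # compliance scaled by the time step
    d_lam = (-c - alpha_tilde * lam) / (w1 + w2 + alpha_tilde)
    new_p1 = [a + w1 * d_lam * ni for a, ni in zip(p1, n)]
    new_p2 = [b - w2 * d_lam * ni for b, ni in zip(p2, n)]
    return new_p1, new_p2, lam + d_lam


# Zero compliance makes the constraint rigid: one step restores the rest length.
q1, q2, lam = xpbd_distance_step([0.0, 0.0, 0.0], [2.0, 0.0, 0.0],
                                 1.0, 1.0, 1.0, 0.0, 0.01, 0.0)
print(q1, q2, lam)
```

A single isolated constraint is satisfied in one step, but in a long chain each update disturbs its neighbors, which is why many Gauss-Seidel sweeps are needed before a stiff rod stops looking soft.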

@article{DKWB2018,

author = {Crispin Deul and Tassilo Kugelstadt and Marcel Weiler and Jan Bender},

title = {Direct Position-Based Solver for Stiff Rods},

year = {2018},

journal = {Computer Graphics Forum},

volume = {37},

number = {6},

pages = {313-324},

keywords = {physically based animation, animation, Computing methodologies → Physical simulation},

doi = {10.1111/cgf.13326},

url = {https://onlinelibrary.wiley.com/doi/abs/10.1111/cgf.13326},

eprint = {https://onlinelibrary.wiley.com/doi/pdf/10.1111/cgf.13326},

}

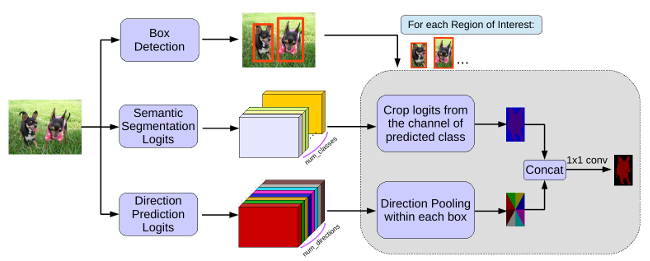

MaskLab: Instance Segmentation by Refining Object Detection with Semantic and Direction Features

In this work, we tackle the problem of instance segmentation, the task of simultaneously solving object detection and semantic segmentation. Towards this goal, we present a model, called MaskLab, which produces three outputs: box detection, semantic segmentation, and direction prediction. Building on top of the Faster-RCNN object detector, the predicted boxes provide accurate localization of object instances. Within each region of interest, MaskLab performs foreground/background segmentation by combining semantic and direction prediction. Semantic segmentation assists the model in distinguishing between objects of different semantic classes including background, while the direction prediction, estimating each pixel's direction towards its corresponding center, allows separating instances of the same semantic class. Moreover, we explore the effect of incorporating recent successful methods from both segmentation and detection (i.e. atrous convolution and hypercolumn). Our proposed model is evaluated on the COCO instance segmentation benchmark and shows comparable performance with other state-of-the-art models.

@article{Chen18CVPR,

title = {{MaskLab: Instance Segmentation by Refining Object Detection with Semantic and Direction Features}},

author = {Chen, Liang-Chieh and Hermans, Alexander and Papandreou, George and Schroff, Florian and Wang, Peng and Adam, Hartwig},

journal = {{IEEE Conference on Computer Vision and Pattern Recognition (CVPR'18)}},

year = {2018}

}

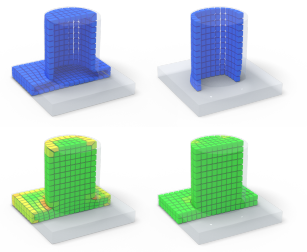

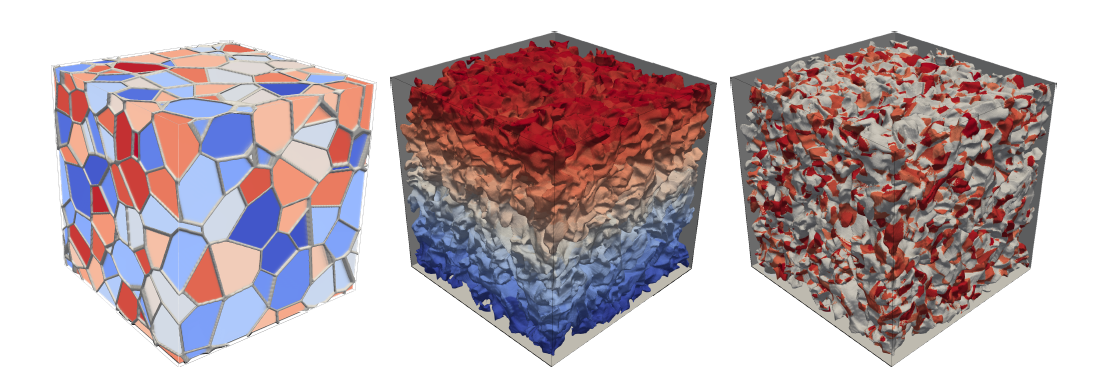

Selective Padding for Polycube-based Hexahedral Meshing

Hexahedral meshes generated from polycube mapping often exhibit a low number of singularities but also poor-quality elements located near the surface. It is thus necessary to improve the overall mesh quality, in terms of the minimum scaled Jacobian (MSJ) or average SJ (ASJ). Improving the quality may be obtained via global padding (or pillowing), which pushes the singularities inside by adding an extra layer of hexahedra on the entire domain boundary. Such a global padding operation suffers from a large increase of complexity, with unnecessary hexahedra added. In addition, the quality of elements near the boundary may decrease. We propose a novel optimization method which inserts sheets of hexahedra so as to perform selective padding, where it is most needed for improving the mesh quality. A sheet can pad part of the domain boundary, traverse the domain and form singularities. Our global formulation, based on solving a binary problem, enables us to control the balance between quality improvement, increase of complexity and number of singularities. We show in a series of experiments that our approach increases the MSJ value and preserves (or even improves) the ASJ, while adding fewer hexahedra than global padding.
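
The scaled Jacobian at a hexahedron corner is commonly defined as the determinant of the three normalized edge vectors leaving that corner: 1 for a perfect cube corner, and zero or negative for a degenerate or inverted one. The sketch below evaluates the minimum over the eight corners of one element. It is an illustration under the stated vertex-ordering assumption, and the names are hypothetical. How the per-element values are aggregated into mesh-level MSJ and ASJ follows the paper and is not reproduced here.

```python
import math

# For each corner: its three edge-connected neighbours, ordered so that the
# edge vectors form a right-handed frame on a well-shaped element. Assumes
# vertices 0-3 run counter-clockwise around the bottom face (seen from above)
# and vertex i+4 lies above vertex i.
CORNER_NEIGHBORS = [(1, 3, 4), (2, 0, 5), (3, 1, 6), (0, 2, 7),
                    (7, 5, 0), (4, 6, 1), (5, 7, 2), (6, 4, 3)]


def _unit(a, b):
    """Unit vector pointing from a to b (edges are assumed non-degenerate)."""
    v = [bi - ai for ai, bi in zip(a, b)]
    length = math.sqrt(sum(x * x for x in v))
    return [x / length for x in v]


def _det3(u, v, w):
    """Determinant of the 3x3 matrix with rows u, v, w."""
    return (u[0] * (v[1] * w[2] - v[2] * w[1])
            - u[1] * (v[0] * w[2] - v[2] * w[0])
            + u[2] * (v[0] * w[1] - v[1] * w[0]))


def min_scaled_jacobian(hex_vertices):
    """Smallest scaled Jacobian over the eight corners of one hexahedron."""
    return min(
        _det3(*(_unit(hex_vertices[c], hex_vertices[n]) for n in nbrs))
        for c, nbrs in enumerate(CORNER_NEIGHBORS))


cube = [(0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0),
        (0, 0, 1), (1, 0, 1), (1, 1, 1), (0, 1, 1)]
print(min_scaled_jacobian(cube))  # 1.0 for a perfect cube
```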

@article{Cherchi2018SelectivePadding,

author = {Cherchi, G. and Alliez, P. and Scateni, R. and Lyon, M. and Bommes, D.},

title = {Selective Padding for Polycube-Based Hexahedral Meshing},

journal = {Computer Graphics Forum},

volume = {38},

number = {1},

pages = {580-591},

keywords = {computational geometry, modelling, physically based modelling, mesh generation, I.3.5 Computer Graphics: Computational Geometry and Object Modeling—Curve, surface, solid, and object representations},

doi = {10.1111/cgf.13593},

url = {https://onlinelibrary.wiley.com/doi/abs/10.1111/cgf.13593},

eprint = {https://onlinelibrary.wiley.com/doi/pdf/10.1111/cgf.13593},

abstract = {Hexahedral meshes generated from polycube mapping often exhibit a low number of singularities but also poor-quality elements located near the surface. It is thus necessary to improve the overall mesh quality, in terms of the minimum scaled Jacobian (MSJ) or average SJ (ASJ). Improving the quality may be obtained via global padding (or pillowing), which pushes the singularities inside by adding an extra layer of hexahedra on the entire domain boundary. Such a global padding operation suffers from a large increase of complexity, with unnecessary hexahedra added. In addition, the quality of elements near the boundary may decrease. We propose a novel optimization method which inserts sheets of hexahedra so as to perform selective padding, where it is most needed for improving the mesh quality. A sheet can pad part of the domain boundary, traverse the domain and form singularities. Our global formulation, based on solving a binary problem, enables us to control the balance between quality improvement, increase of complexity and number of singularities. We show in a series of experiments that our approach increases the MSJ value and preserves (or even improves) the ASJ, while adding fewer hexahedra than global padding.},

year = {2019}

}

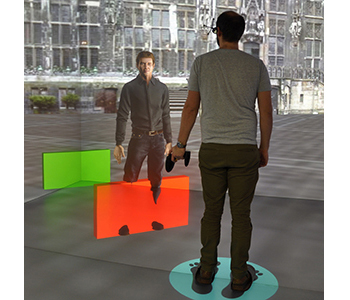

Virtual Humans as Co-Workers: A Novel Methodology to Study Peer Effects

We introduce a novel methodology to study peer effects. Using virtual reality technology, we create a naturalistic work setting in an immersive virtual environment where we embed a computer-generated virtual human as the co-worker of a human subject, both performing a sorting task at a conveyor belt. In our setup, subjects observe the virtual peer, while the virtual human is not observing them. In two treatments, human subjects observe either a low productive or a high productive virtual peer. We find that human subjects rate their presence feeling of “being there” in the immersive virtual environment as natural. Subjects also recognize that virtual peers in our two treatments showed different productivities. We do not find a general treatment effect on productivity. However, we find that competitive subjects display higher performance when they are in the presence of a highly productive peer, compared to when they observe a low productive peer. We use tracking data to learn about the subjects' body movements. Analyzing hand and head data, we show that competitive subjects are more careful in the sorting task than non-competitive subjects. We also discuss some methodological issues related to virtual reality experiments.

@article{gurerk2018,

title={{Virtual Humans as Co-Workers: A Novel Methodology to Study Peer Effects}},

author={G{\"u}rerk, Ozg{\"u}r and B{\"o}nsch, Andrea and Kittsteiner, Thomas and Staffeldt, Andreas},

year={2018},

journal = {{Journal of Behavioral and Experimental Economics}},

issn = {2214-8043},

doi = {https://doi.org/10.1016/j.socec.2018.11.003},

url = {http://www.sciencedirect.com/science/article/pii/S221480431830140X}

}

PReMVOS: Proposal-generation, Refinement and Merging for the DAVIS Challenge on Video Object Segmentation 2018

We address semi-supervised video object segmentation, the task of automatically generating accurate and consistent pixel masks for objects in a video sequence, given the first-frame ground truth annotations. Towards this goal, we present the PReMVOS algorithm (Proposal-generation, Refinement and Merging for Video Object Segmentation). This method involves generating coarse object proposals using a Mask R-CNN-like object detector, followed by a refinement network that produces accurate pixel masks for each proposal. We then select and link these proposals over time using a merging algorithm that takes into account an objectness score, the optical flow warping, and a Re-ID feature embedding vector for each proposal. We adapt our networks to the target video domain by fine-tuning on a large set of augmented images generated from the first-frame ground truth. Our approach surpasses all previous state-of-the-art results on the DAVIS 2017 video object segmentation benchmark and achieves first place in the DAVIS 2018 Video Object Segmentation Challenge with a J & F mean score of 74.7.

@article{Luiten18CVPRW,

author = {Jonathon Luiten and Paul Voigtlaender and Bastian Leibe},

title = {{PReMVOS: Proposal-generation, Refinement and Merging for the DAVIS Challenge on Video Object Segmentation 2018}},

journal = {The 2018 DAVIS Challenge on Video Object Segmentation - CVPR Workshops},

year = {2018}

}

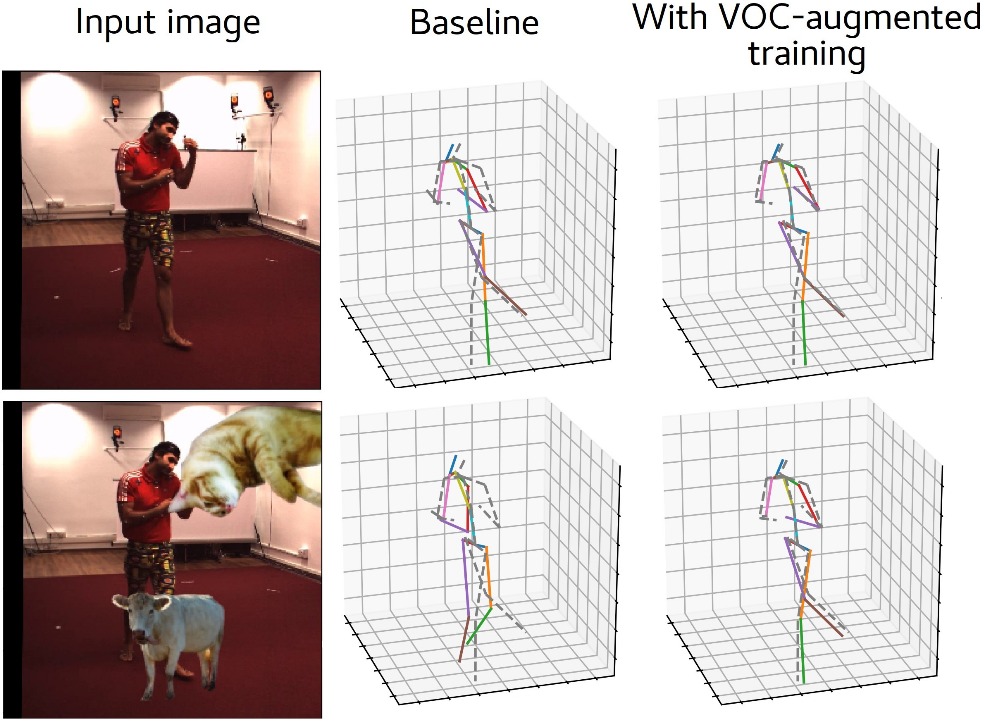

How Robust is 3D Human Pose Estimation to Occlusion?

Occlusion is commonplace in realistic human-robot shared environments, yet its effects are not considered in standard 3D human pose estimation benchmarks. This leaves the question open: how robust are state-of-the-art 3D pose estimation methods against partial occlusions? We study several types of synthetic occlusions over the Human3.6M dataset and find a method with state-of-the-art benchmark performance to be sensitive even to low amounts of occlusion. Addressing this issue is key to progress in applications such as collaborative and service robotics. We take a first step in this direction by improving occlusion-robustness through training data augmentation with synthetic occlusions. This also turns out to be an effective regularizer that is beneficial even for non-occluded test cases.

@inproceedings{Sarandi18IROSW,

title={How Robust is {3D} Human Pose Estimation to Occlusion?},

author={S\'ar\'andi, Istv\'an and Linder, Timm and Arras, Kai O. and Leibe, Bastian},

booktitle={IEEE/RSJ International Conference on Intelligent Robots and Systems Workshops (IROSW)},

year={2018}

}

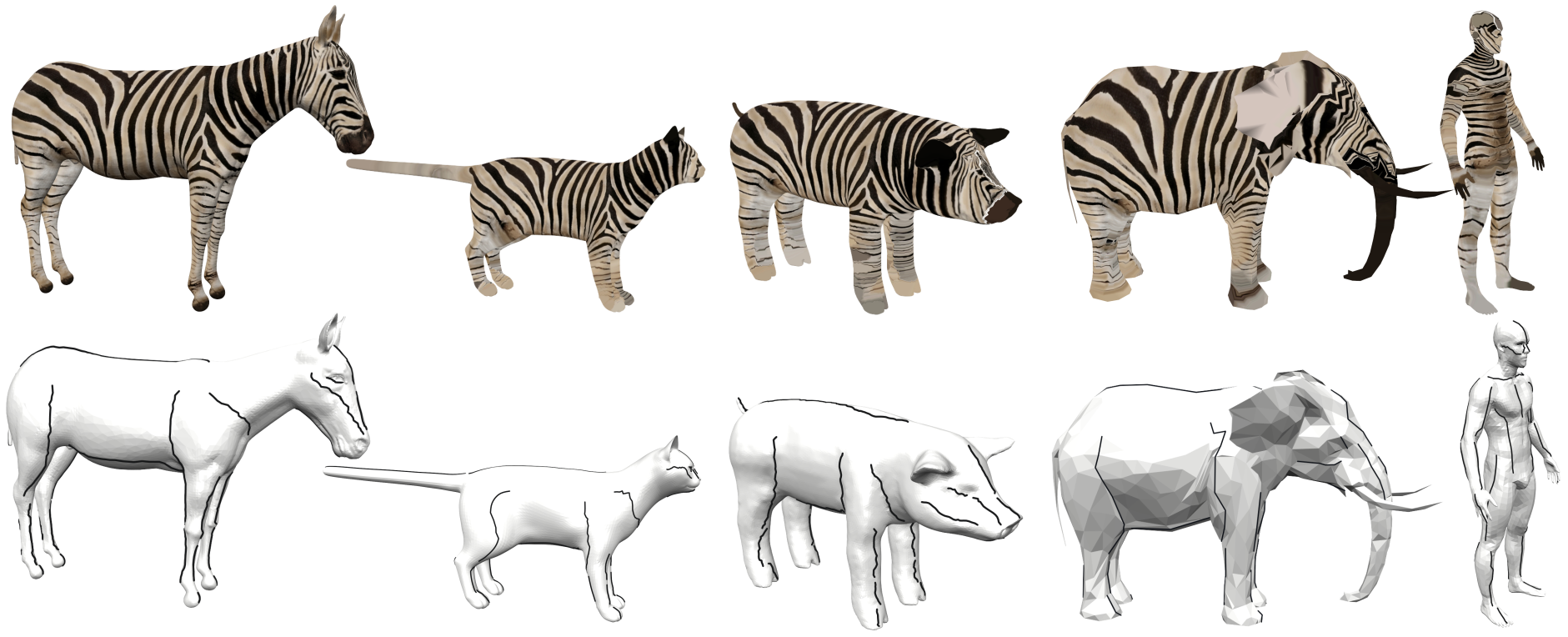

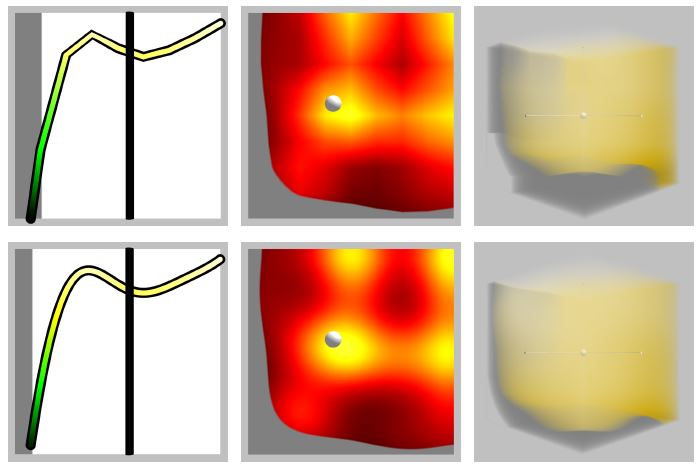

Interactive Curve Constrained Functional Maps

Functional maps have gained popularity as a versatile framework for representing intrinsic correspondence between 3D shapes using algebraic machinery. A key ingredient for this framework is the ability to find pairs of corresponding functions (typically, feature descriptors) across the shapes. This is a challenging problem on its own, and when the shapes are strongly non-isometric, nearly impossible to solve automatically. In this paper, we use feature curve correspondences to provide flexible abstractions of semantically similar parts of non-isometric shapes. We design a user interface implementing an interactive process for constructing shape correspondence, allowing the user to update the functional map at interactive rates by introducing feature curve correspondences. We add feature curve preservation constraints to the functional map framework and propose an efficient numerical method to optimize the map with immediate feedback. Experimental results show that our approach establishes correspondences between geometrically diverse shapes with just a few clicks.

@article{Gehre:2018:InteractiveFunctionalMaps,

author = "Gehre, Anne and Bronstein, Michael and Kobbelt, Leif and Solomon, Justin",

title = "Interactive Curve Constrained Functional Maps",

journal = "Computer Graphics Forum",

volume = 37,

number = 5,

year = 2018

}

Interactive Visual Analysis of Multi-dimensional Metamodels

In the simulation of manufacturing processes, complex models are used to examine process properties. To save computation time, so-called metamodels serve as surrogates for the original models. Metamodels are inherently difficult to interpret, because they resemble multi-dimensional functions f : R^n -> R^m that map configuration parameters to production criteria. We propose a multi-view visualization application called memoSlice that composes several visualization techniques, specially adapted to the analysis of metamodels. With our application, we enable users to improve their understanding of a metamodel, but also to easily optimize processes. We pay special attention to providing a high level of interactivity by realizing specialized parallelization techniques to provide timely feedback on user interactions. In this paper we outline these parallelization techniques and demonstrate their effectiveness by means of micro and high level measurements.

@inproceedings {pgv.20181098,

booktitle = {Eurographics Symposium on Parallel Graphics and Visualization},

editor = {Hank Childs and Fernando Cucchietti},

title = {{Interactive Visual Analysis of Multi-dimensional Metamodels}},

author = {Gebhardt, Sascha and Pick, Sebastian and Hentschel, Bernd and Kuhlen, Torsten Wolfgang},

year = {2018},

publisher = {The Eurographics Association},

ISSN = {1727-348X},

ISBN = {978-3-03868-054-3},

DOI = {10.2312/pgv.20181098}

}

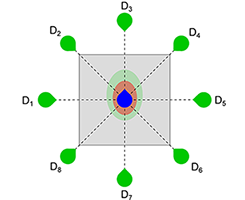

Social VR: How Personal Space is Affected by Virtual Agents’ Emotions

Personal space (PS), the flexible protective zone maintained around oneself, is a key element of everyday social interactions. It, e.g., affects people's interpersonal distance and is thus largely involved when navigating through social environments. However, the PS is regulated dynamically, its size depends on numerous social and personal characteristics and its violation evokes different levels of discomfort and physiological arousal. Thus, gaining more insight into this phenomenon is important.

We contribute to the PS investigations by presenting the results of a controlled experiment in a CAVE, focusing on German males aged 18 to 30. The PS preferences of 27 participants have been sampled while they were approached by either a single embodied, computer-controlled virtual agent (VA) or by a group of three VAs. In order to investigate the influence of a VA's emotions, we altered their facial expression between angry and happy. Our results indicate that the emotion as well as the number of VAs approaching influence the PS: participants keep larger distances from angry VAs than from happy ones, and single VAs are allowed closer than the group. Thus, our study is a foundation for social and behavioral studies investigating PS preferences.

@InProceedings{Boensch2018c,

author = {Andrea B\"{o}nsch and Sina Radke and Heiko Overath and Laura M. Asch\'{e} and Jonathan Wendt and Tom Vierjahn and Ute Habel and Torsten W. Kuhlen},

title = {{Social VR: How Personal Space is Affected by Virtual Agents’ Emotions}},

booktitle = {Proceedings of the IEEE Conference on Virtual Reality and 3D User Interfaces (VR) 2018},

year = {2018}

}

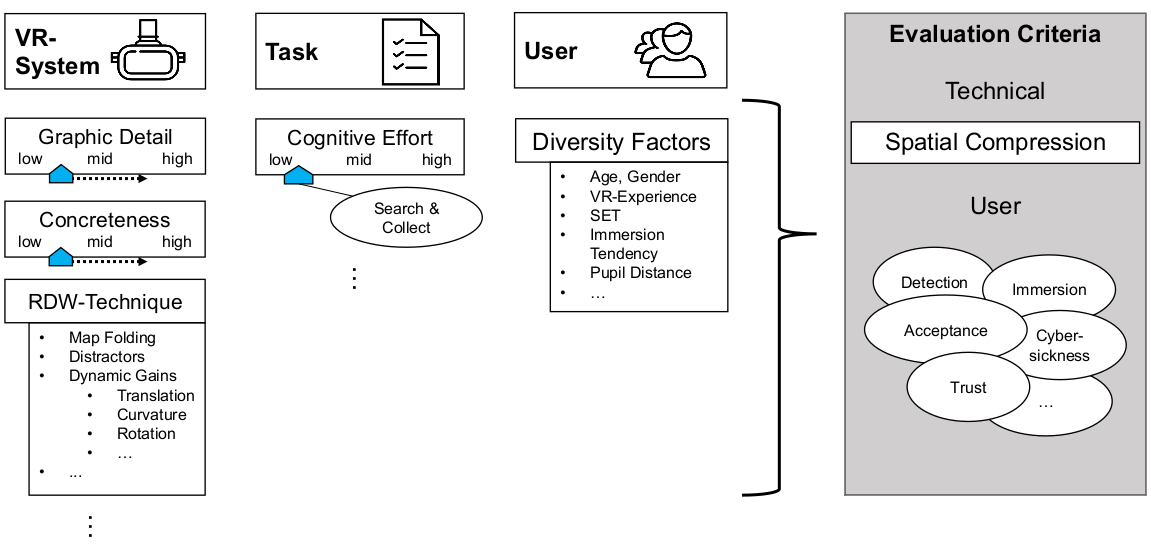

You Spin my Head Right Round: Threshold of Limited Immersion for Rotation Gains in Redirected Walking

In virtual environments, the space that can be explored by real walking is limited by the size of the tracked area. To enable unimpeded walking through large virtual spaces in small real-world surroundings, redirection techniques are used. These unnoticeably manipulate the user’s virtual walking trajectory. It is important to know how strongly such techniques can be applied without the user noticing the manipulation or getting cybersick. Previously, this was estimated by measuring a detection threshold (DT) in highly-controlled psychophysical studies, which experimentally isolate the effect but do not aim for perceived immersion in the context of VR applications. While these studies suggest that only relatively low degrees of manipulation are tolerable, we claim that, besides establishing detection thresholds, it is important to know when the user’s immersion breaks. We hypothesize that the degree of unnoticed manipulation is significantly different from the detection threshold when the user is immersed in a task. We conducted three studies: a) to devise an experimental paradigm to measure the threshold of limited immersion (TLI), b) to measure the TLI for slowly decreasing and increasing rotation gains, and c) to establish a baseline of cybersickness for our experimental setup. For rotation gains greater than 1.0, we found that immersion breaks quite late after the gain is detectable. However, for gains smaller than 1.0, some users reported a break of immersion even before established detection thresholds were reached. Apparently, the developed metric measures an additional quality of user experience. This article contributes to the development of effective spatial compression methods by utilizing the break of immersion as a benchmark for redirection techniques.
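
For orientation, a rotation gain is commonly defined as the ratio of virtual to real rotation, so a gain above 1.0 turns the virtual view further than the head physically turned, and a gain below 1.0 turns it less. The following minimal Python sketch illustrates only that relation; the function names and sample values are illustrative assumptions and are not taken from the article.

```python
def apply_rotation_gain(real_yaw_delta_deg, gain):
    """Virtual yaw change (degrees) produced by a physical yaw change under a gain."""
    return gain * real_yaw_delta_deg


def physical_turn_needed(virtual_turn_deg, gain):
    """Physical yaw change (degrees) needed to achieve a desired virtual turn."""
    return virtual_turn_deg / gain


# With a gain of 1.5, a 90 degree virtual turn needs only 60 degrees of real rotation,
# which is how such gains compress the required physical space.
virtual = apply_rotation_gain(60.0, 1.5)
physical = physical_turn_needed(90.0, 1.5)
```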

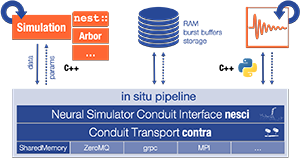

Streaming Live Neuronal Simulation Data into Visualization and Analysis

Neuroscientists want to inspect the data their simulations are producing while these are still running. This will on the one hand save them time waiting for results and therefore insight. On the other, it will allow for more efficient use of CPU time if the simulations are being run on supercomputers. If they had access to the data being generated, neuroscientists could monitor it and take counter-actions, e.g., parameter adjustments, should the simulation deviate too much from in-vivo observations or get stuck.

As a first step toward this goal, we devise an in situ pipeline tailored to the neuroscientific use case. It is capable of recording and transferring simulation data to an analysis/visualization process, while the simulation is still running. The developed libraries are made publicly available as open source projects. We provide a proof-of-concept integration, coupling the neuronal simulator NEST to basic 2D and 3D visualization.

@InProceedings{10.1007/978-3-030-02465-9_18,

author="Oehrl, Simon

and M{\"u}ller, Jan

and Schnathmeier, Jan

and Eppler, Jochen Martin

and Peyser, Alexander

and Plesser, Hans Ekkehard

and Weyers, Benjamin

and Hentschel, Bernd

and Kuhlen, Torsten W.

and Vierjahn, Tom",

editor="Yokota, Rio

and Weiland, Mich{\`e}le

and Shalf, John

and Alam, Sadaf",

title="Streaming Live Neuronal Simulation Data into Visualization and Analysis",

booktitle="High Performance Computing",

year="2018",

publisher="Springer International Publishing",

address="Cham",

pages="258--272",

abstract="Neuroscientists want to inspect the data their simulations are producing while these are still running. This will on the one hand save them time waiting for results and therefore insight. On the other, it will allow for more efficient use of CPU time if the simulations are being run on supercomputers. If they had access to the data being generated, neuroscientists could monitor it and take counter-actions, e.g., parameter adjustments, should the simulation deviate too much from in-vivo observations or get stuck.",

isbn="978-3-030-02465-9"

}

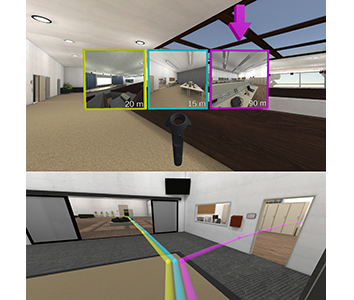

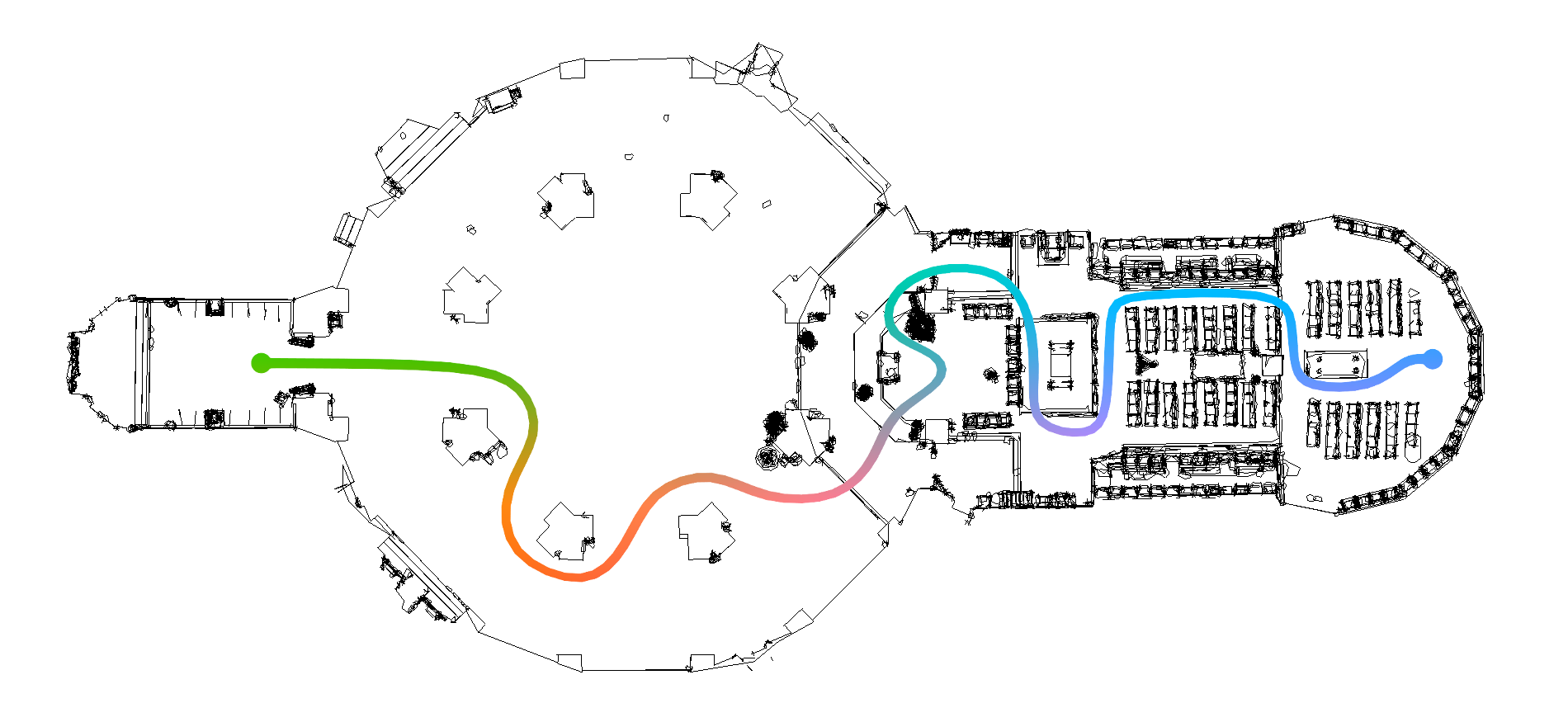

Interactive Exploration Assistance for Immersive Virtual Environments Based on Object Visibility and Viewpoint Quality

During free exploration of an unknown virtual scene, users often miss important parts, leading to incorrect or incomplete environment knowledge and a potential negative impact on performance in later tasks. This is addressed by wayfinding aids such as compasses, maps, or trails, and automated exploration schemes such as guided tours. However, these approaches either do not actually ensure exploration success or take away control from the user.

Therefore, we present an interactive assistance interface to support exploration that guides users to interesting and unvisited parts of the scene upon request, supplementing their own, free exploration. It is based on an automated analysis of object visibility and viewpoint quality and is therefore applicable to a wide range of scenes without human supervision or manual input. In a user study, we found that the approach improves users' knowledge of the environment, leads to a more complete exploration of the scene, and is also subjectively helpful and easy to use.

Does the Directivity of a Virtual Agent’s Speech Influence the Perceived Social Presence?

When interacting and communicating with virtual agents in immersive environments, the agents’ behavior should be believable and authentic. Thereby, one important aspect is a convincing auralization of their speech. In this work-in-progress paper, a study design to evaluate the effect of adding directivity to the speech sound source on the perceived social presence of a virtual agent is presented. Therefore, we describe the study design and discuss first results of a prestudy as well as consequential improvements of the design.

@InProceedings{Boensch2018b,

author = {Jonathan Wendt and Benjamin Weyers and Andrea B\"{o}nsch and Jonas Stienen and Tom Vierjahn and Michael Vorländer and Torsten W. Kuhlen },

title = {{Does the Directivity of a Virtual Agent’s Speech Influence the Perceived Social Presence?}},

booktitle = {IEEE Virtual Humans and Crowds for Immersive Environments (VHCIE)},

year = {2018}

}

Dynamic Field of View Reduction Related to Subjective Sickness Measures in an HMD-based Data Analysis Task

Various factors influence the degree of cybersickness a user can suffer in an immersive virtual environment, some of which can be controlled without adapting the virtual environment itself. When using HMDs, one example is the size of the field of view. However, the degree to which factors like this can be manipulated without affecting the user negatively in other ways is limited. Another prominent characteristic of cybersickness is that it affects individuals very differently. Therefore, to account for both the possible disruptive nature of alleviating factors and the high interpersonal variance, a promising approach may be to intervene only in cases where users experience discomfort symptoms, and only as much as necessary. Thus, we conducted a first experiment, where the field of view was decreased when people felt uncomfortable, to evaluate the possible positive impact on sickness and negative influence on presence. While we found no significant evidence for any of these possible effects, interesting further results and observations were made.

@InProceedings{zielasko2018,

title={{Dynamic Field of View Reduction Related to Subjective Sickness Measures in an HMD-based Data Analysis Task}},

author={Zielasko, Daniel and Mei{\ss}ner, Alexander and Freitag, Sebastian and Weyers, Benjamin and Kuhlen, Torsten W},

booktitle ={Proc. of IEEE Virtual Reality Workshop on Everyday Virtual Reality},

year={2018}

}

Towards Understanding the Influence of a Virtual Agent’s Emotional Expression on Personal Space

The concept of personal space is a key element of social interactions. As such, it is a recurring subject of investigations in the context of research on proxemics. Using virtual-reality-based experiments, we contribute to this area by evaluating the direct effects of emotional expressions of an approaching virtual agent on an individual’s behavioral and physiological responses. As a pilot study focusing on the emotion expressed solely by facial expressions gave promising results, we now present a study design to gain more insight.

@InProceedings{Boensch2018b,

author = {Andrea B\"{o}nsch and Sina Radke and Jonathan Wendt and Tom Vierjahn and Ute Habel and Torsten W. Kuhlen},

title = {{Towards Understanding the Influence of a Virtual Agent’s Emotional Expression on Personal Space}},

booktitle = {IEEE Virtual Humans and Crowds for Immersive Environments (VHCIE)},

year = {2018}

}

Fluid Sketching — Immersive Sketching Based on Fluid Flow

Fluid artwork refers to works of art based on the aesthetics of fluid motion, such as smoke photography, ink injection into water, and paper marbling. Inspired by such types of art, we created Fluid Sketching as a novel medium for creating 3D fluid artwork in immersive virtual environments. It allows artists to draw 3D fluid-like sketches and manipulate them via six degrees of freedom input devices. Different sets of brush strokes are available, varying different characteristics of the fluid. Because of fluid's nature, the diffusion of the drawn fluid sketch is animated, and artists have control over altering the fluid properties and stopping the diffusion process whenever they are satisfied with the current result. Furthermore, they can shape the drawn sketch by directly interacting with it, either with their hand or by blowing into the fluid. We rely on particle advection via curl-noise as a fast procedural method for animating the fluid flow.
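
To illustrate the idea behind particle advection via curl-noise mentioned above: taking the curl of a scalar potential yields a divergence-free velocity field, so advected particles swirl without piling up. The sketch below is a 2D illustration under stated assumptions, not the application's implementation. It substitutes a smooth analytic `potential` for a real noise function, and all names and step sizes are hypothetical.

```python
import math


def potential(x, y):
    # Stand-in scalar potential (assumption); a real curl-noise setup
    # would sample Perlin or simplex noise here instead.
    return math.sin(1.3 * x) * math.cos(0.7 * y) + 0.5 * math.sin(0.4 * x + 2.1 * y)


def curl_velocity(x, y, eps=1e-4):
    # 2D curl of the potential via central differences: v = (dpsi/dy, -dpsi/dx).
    dpsi_dx = (potential(x + eps, y) - potential(x - eps, y)) / (2 * eps)
    dpsi_dy = (potential(x, y + eps) - potential(x, y - eps)) / (2 * eps)
    return dpsi_dy, -dpsi_dx


def advect(particles, dt=0.01, steps=100):
    # Forward-Euler advection of (x, y) particles through the velocity field.
    for _ in range(steps):
        moved = []
        for x, y in particles:
            vx, vy = curl_velocity(x, y)
            moved.append((x + dt * vx, y + dt * vy))
        particles = moved
    return particles


def divergence(x, y, eps=1e-3):
    # Numerical divergence of the velocity field; close to zero by construction.
    dvx_dx = (curl_velocity(x + eps, y)[0] - curl_velocity(x - eps, y)[0]) / (2 * eps)
    dvy_dy = (curl_velocity(x, y + eps)[1] - curl_velocity(x, y - eps)[1]) / (2 * eps)
    return dvx_dx + dvy_dy


stroke = [(0.1 * i, 0.0) for i in range(10)]  # a short horizontal "brush stroke"
animated = advect(stroke)
```

Because the field is procedural, no fluid solver state has to be stored or stepped, which is consistent with the abstract's description of curl-noise as a fast procedural method.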

@InProceedings{Eroglu2018,

author = {Eroglu, Sevinc and Gebhardt, Sascha and Schmitz, Patric and Rausch, Dominik and Kuhlen, Torsten Wolfgang},

title = {{Fluid Sketching — Immersive Sketching Based on Fluid Flow}},

booktitle = {Proceedings of IEEE Virtual Reality Conference 2018},

year = {2018}

}

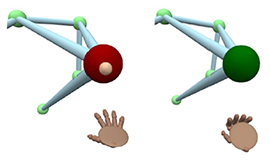

Seamless Hand-Based Remote and Close Range Interaction in IVEs

In this work, we describe a hybrid, hand-based interaction metaphor that makes remote and close objects in an HMD-based immersive virtual environment (IVE) seamlessly accessible. To accomplish this, different existing techniques, such as go-go and HOMER, were combined in a way that aims for generality, intuitiveness, uniformity, and speed. A technique like this is one prerequisite for a successful integration of IVEs to professional everyday applications, such as data analysis workflows.

A Simple Approach to Intrinsic Correspondence Learning on Unstructured 3D Meshes

The question of representation of 3D geometry is of vital importance when it comes to leveraging the recent advances in the field of machine learning for geometry processing tasks. For common unstructured surface meshes state-of-the-art methods rely on patch-based or mapping-based techniques that introduce resampling operations in order to encode neighborhood information in a structured and regular manner. We investigate whether such resampling can be avoided, and propose a simple and direct encoding approach. It does not only increase processing efficiency due to its simplicity - its direct nature also avoids any loss in data fidelity. To evaluate the proposed method, we perform a number of experiments in the challenging domain of intrinsic, non-rigid shape correspondence estimation. In comparisons to current methods we observe that our approach is able to achieve highly competitive results.

@InProceedings{lim2018_correspondence_learning,

author = {Lim, Isaak and Dielen, Alexander and Campen, Marcel and Kobbelt, Leif},

title = {A Simple Approach to Intrinsic Correspondence Learning on Unstructured 3D Meshes},

booktitle = {The European Conference on Computer Vision (ECCV) Workshops},

month = {September},

year = {2018}

}

Poster: Complexity Estimation for Feature Tracking Data.

Feature tracking is a method of time-varying data analysis. Due to the complexity of the underlying problem, different feature tracking algorithms have different levels of correctness in certain use cases. However, there is no efficient way to evaluate their performance on simulation data since no ground truth is easily obtainable. Synthetic data is a way to ensure a minimum level of correctness, though there are limits to its expressiveness when comparing the results to simulation data. To close this gap, we calculate a synthetic data set and use its results to extract a hypothesis about the algorithm performance that we can apply to simulation data.

@inproceedings{Helmrich2018,

title={Complexity Estimation for Feature Tracking Data.},

author={Helmrich, Dirk N and Schnorr, Andrea and Kuhlen, Torsten W and Hentschel, Bernd},

booktitle={LDAV},

pages={100--101},

year={2018}

}

PReMVOS: Proposal-generation, Refinement and Merging for the YouTube-VOS Challenge on Video Object Segmentation 2018

We evaluate our PReMVOS algorithm [1, 2] on the new YouTube-VOS dataset [3] for the task of semi-supervised video object segmentation (VOS). This task consists of automatically generating accurate and consistent pixel masks for multiple objects in a video sequence, given the objects’ first-frame ground truth annotations. The new YouTube-VOS dataset and the corresponding challenge, the 1st Large-scale Video Object Segmentation Challenge, provide a much larger scale evaluation than any previous VOS benchmarks. Our method achieves the best results in the 2018 Large-scale Video Object Segmentation Challenge with a J & F overall mean score over both known and unknown categories of 72.2.

@article{Luiten18ECCVW,

author = {Jonathon Luiten and Paul Voigtlaender and Bastian Leibe},

title = {{PReMVOS: Proposal-generation, Refinement and Merging for the YouTube-VOS Challenge on Video Object Segmentation 2018}},

journal = {The 1st Large-scale Video Object Segmentation Challenge - ECCV Workshops},

year = {2018}

}

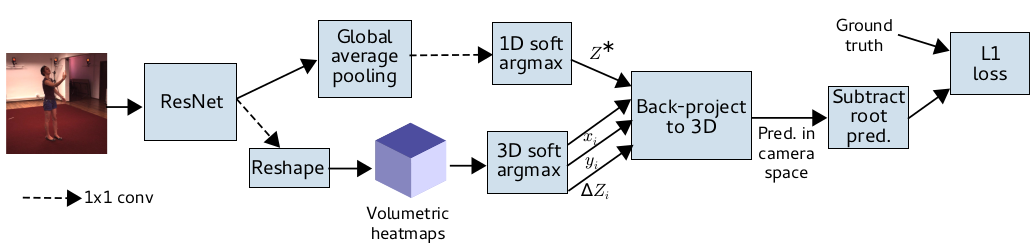

Synthetic Occlusion Augmentation with Volumetric Heatmaps for the 2018 ECCV PoseTrack Challenge on 3D Human Pose Estimation

In this paper we present our winning entry at the 2018 ECCV PoseTrack Challenge on 3D human pose estimation. Using a fully-convolutional backbone architecture, we obtain volumetric heatmaps per body joint, which we convert to coordinates using soft-argmax. Absolute person center depth is estimated by a 1D heatmap prediction head. The coordinates are back-projected to 3D camera space, where we minimize the L1 loss. Key to our good results is the training data augmentation with randomly placed occluders from the Pascal VOC dataset. In addition to reaching first place in the Challenge, our method also surpasses the state-of-the-art on the full Human3.6M benchmark when considering methods that use no extra pose datasets in training. Code for applying synthetic occlusions is available at https://github.com/isarandi/synthetic-occlusion.
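
As a small illustration of the soft-argmax step named in the abstract: instead of taking the index of the largest heatmap value, one takes the expected index under a softmax of the heatmap, which is differentiable. The sketch below works on a 1D list of scores for brevity. Applying it per axis to a volumetric heatmap, the `temperature` parameter, and the function name are assumptions for illustration and not details from the paper.

```python
import math


def soft_argmax_1d(scores, temperature=1.0):
    """Expected index under softmax(scores / temperature)."""
    peak = max(scores)  # subtract the maximum for numerical stability
    weights = [math.exp((s - peak) / temperature) for s in scores]
    total = sum(weights)
    return sum(i * w for i, w in enumerate(weights)) / total


# A symmetric bump around index 1 yields a coordinate close to 1.0, and a peak
# spread over two neighbouring bins yields a sub-bin coordinate between them.
center = soft_argmax_1d([0.0, 1.0, 0.0])
between = soft_argmax_1d([0.0, 5.0, 5.0, 0.0])
```

Unlike a hard argmax, the result is not restricted to integer bin positions, which is one reason the technique is popular for coordinate regression from heatmaps.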

@article{Sarandi18synthocc,

author = {S\'ar\'andi, Istv\'an and Linder, Timm and Arras, Kai O. and Leibe, Bastian},

title = {Synthetic Occlusion Augmentation with Volumetric Heatmaps for the 2018 {ECCV PoseTrack Challenge} on {3D} Human Pose Estimation},

journal={arXiv preprint arXiv:1809.04987},

year = {2018}

}

Deep Person Detection in 2D Range Data

TL;DR: Extend the DROW dataset to persons, extend the method to include short temporal context, and extensively benchmark all available methods.

Detecting humans is a key skill for mobile robots and intelligent vehicles in a large variety of applications. While the problem is well studied for certain sensory modalities such as image data, few works exist that address this detection task using 2D range data. However, a widespread sensory setup for many mobile robots in service and domestic applications contains a horizontally mounted 2D laser scanner. Detecting people from 2D range data is challenging due to the speed and dynamics of human leg motion and the high levels of occlusion and self-occlusion particularly in crowds of people. While previous approaches mostly relied on handcrafted features, we recently developed the deep learning based wheelchair and walker detector DROW. In this paper, we show the generalization to people, including small modifications that significantly boost DROW's performance. Additionally, by providing a small, fully online temporal window in our network, we further boost our score. We extend the DROW dataset with person annotations, making this the largest dataset of person annotations in 2D range data, recorded during several days in a real-world environment with high diversity. Extensive experiments with three current baseline methods indicate it is a challenging dataset, on which our improved DROW detector beats the current state-of-the-art.

@article{Beyer2018RAL,

title = {{Deep Person Detection in 2D Range Data}},

author = {Beyer, Lucas and Hermans, Alexander and Linder, Timm and Arras, Kai Oliver and Leibe, Bastian},

journal = {IEEE Robotics and Automation Letters},

volume = {3},

number = {3},

pages = {2726--2733},

year = {2018}

}

Get Well Soon! Human Factors’ Influence on Cybersickness after Redirected Walking Exposure in Virtual Reality

Cybersickness poses a crucial threat to applications in the domain of Virtual Reality. Yet, its predictors are insufficiently explored when redirection techniques are applied. Those techniques let users explore large virtual spaces by natural walking in a smaller tracked space. This is achieved by unnoticeably manipulating the user’s virtual walking trajectory. Unfortunately, this also makes the application more prone to cause Cybersickness. We conducted a user study with a semi-structured interview to get quantitative and qualitative insights into this domain. Results show that Cybersickness arises, but also eases ten minutes after the exposure. Quantitative results indicate that a tolerance towards Cybersickness might be related to self-efficacy constructs and therefore learnable or trainable, while qualitative results indicate that users’ endurance of Cybersickness is dependent on symptom factors such as intensity and duration, as well as factors of usage context and motivation. The role of Cybersickness in Virtual Reality environments is discussed in terms of the applicability of redirected walking techniques.

Talk: Streaming Live Neuronal Simulation Data into Visualization and Analysis

Being able to inspect neuronal network simulations while they are running provides new research strategies to neuroscientists as it enables them to perform actions like parameter adjustments in case the simulation performs unexpectedly. This can also save compute resources when such simulations are run on large supercomputers as errors can be detected and corrected earlier saving valuable compute time. This talk presents a prototypical pipeline that enables in-situ analysis and visualization of running simulations.

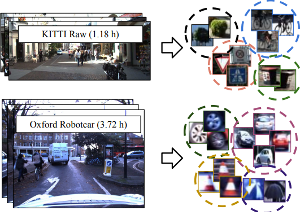

Towards Large-Scale Video Object Mining

We propose to leverage a generic object tracker in order to perform object mining in large-scale unlabeled videos, captured in a realistic automotive setting. We present a dataset of more than 360'000 automatically mined object tracks from 10+ hours of video data (560'000 frames) and propose a method for automated novel category discovery and detector learning. In addition, we show preliminary results on using the mined tracks for object detector adaptation.

@article{OsepVoigtlaender18ECCVW,

title={Towards Large-Scale Video Object Mining},

author={Aljo\v{s}a O\v{s}ep and Paul Voigtlaender and Jonathon Luiten and Stefan Breuers and Bastian Leibe},

journal={ECCV 2018 Workshop on Interactive and Adaptive Learning in an Open World},

year={2018}

}

Fluid Sketching: Bringing Ebru Art into VR

In this interactive demo, we present our Fluid Sketching application as an innovative virtual reality-based interpretation of traditional marbling art. By using a particle-based simulation combined with natural, spatial, and multi-modal interaction techniques, we create and extend the original artistic work to build a comprehensive interactive experience. With the interactive demo of Fluid Sketching during Mensch und Computer 2018, we aim at increasing the awareness of paper marbling as a traditional type of art and demonstrating the potential of virtual reality as a new and innovative digital and artistic medium.

@article{eroglu2018fluid,

title={Fluid Sketching: Bringing Ebru Art into VR},

author={Eroglu, Sevinc and Weyers, Benjamin and Kuhlen, Torsten},

journal={Mensch und Computer 2018-Workshopband},

year={2018},

publisher={Gesellschaft f{\"u}r Informatik eV}

}

Talk: Influence of Emotions on Personal Space Preferences

Personal Space (PS) is regulated dynamically by choosing an appropriate interpersonal distance when navigating through social environments. This key element in social interactions is influenced by numerous social and personal characteristics, e.g., the nature of the relationship between the interaction partners and the other’s sex and age. Moreover, affective contexts and expressions of interaction partners influence PS preferences, evident, e.g., in larger distances to others in threatening situations or when confronted with angry-looking individuals. Given the prominent role of emotional expressions in our everyday social interactions, we investigate how emotions affect PS adaptions.

Real Walking in Virtual Spaces: Visiting the Aachen Cathedral

Real walking is the most natural and intuitive way to navigate the world around us. In Virtual Reality, the limited tracking area of commercially available systems typically does not match the size of the virtual environment we wish to explore. Spatial compression methods enable the user to walk further in the virtual environment than the real tracking bounds permit. This demo gives a glimpse into our ongoing research on spatial compression in VR. Visitors can walk through a realistic model of the Aachen Cathedral within a room-sized tracking area.

@article{schmitz2018real,

title={Real Walking in Virtual Spaces: Visiting the Aachen Cathedral},

author={Schmitz, Patric and Kobbelt, Leif},

journal={Mensch und Computer 2018-Workshopband},

year={2018},

publisher={Gesellschaft f{\"u}r Informatik eV}

}

Near-Constant Density Wireframe Meshes for 3D Printing

In fused deposition modeling (FDM) an object is usually constructed layer-by-layer. Using FDM 3D printers it is however also possible to extrude filament directly in 3D space. Using this technique, a wireframe version of an object can be created by directly printing the wireframe edges into 3D space. This way the print time can be reduced and significant material savings can be achieved. This paper presents a technique for wireframe mesh generation with application in 3D printing. The proposed technique transforms triangle meshes into polygonal meshes, from which the edges can be printed to create the wireframe. Furthermore, the method is able to generate a near-constant density of lines, even in regions parallel to the build platform.

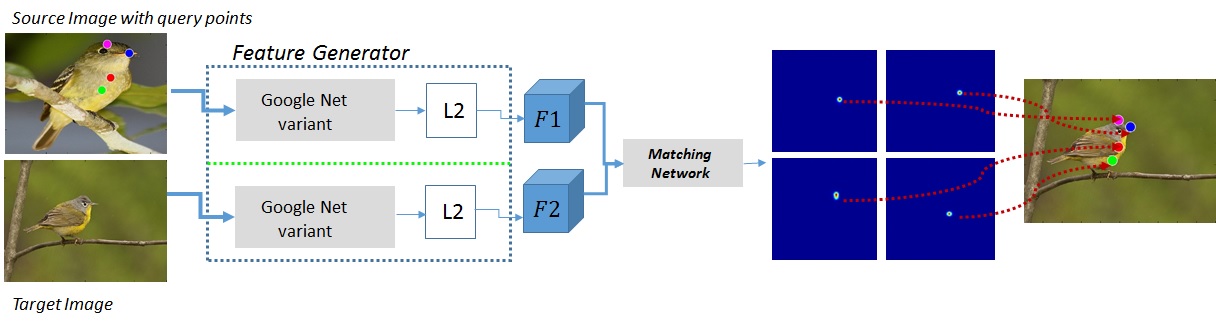

Direct Shot Correspondence Matching

We propose a direct shot method for the task of correspondence matching. Instead of minimizing a loss based on positive and negative pairs, which requires a hard-negative mining step for training and a nearest-neighbor search step for inference, we propose a novel similarity heatmap generator that makes these additional steps obsolete. The similarity heatmap generator efficiently generates peaked similarity heatmaps over the target image for all the query keypoints in a single pass. The matching network can be appended to any standard deep network architecture to make it end-to-end trainable with N-pairs based metric learning and achieves superior performance. We evaluate the proposed method on various correspondence matching datasets and achieve state-of-the-art performance.

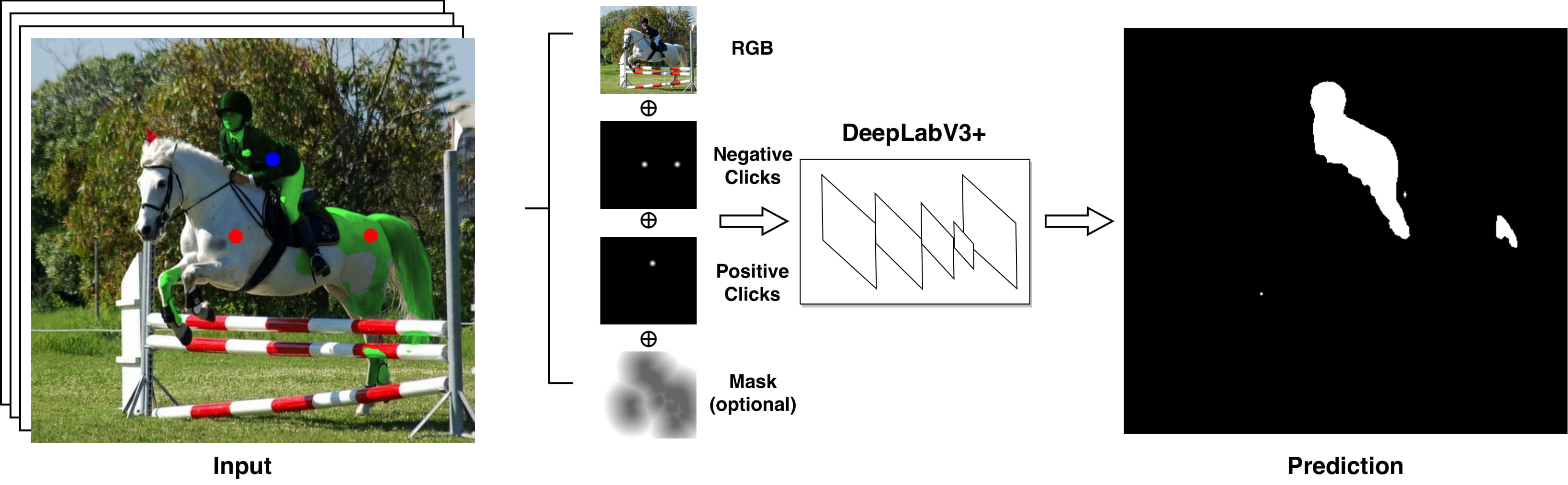

Iteratively Trained Interactive Segmentation

Deep learning requires large amounts of training data to be effective. For the task of object segmentation, manually labeling data is very expensive, and hence interactive methods are needed. Following recent approaches, we develop an interactive object segmentation system which uses user input in the form of clicks as the input to a convolutional network. While previous methods use heuristic click sampling strategies to emulate user clicks during training, we propose a new iterative training strategy. During training, we iteratively add clicks based on the errors of the currently predicted segmentation. We show that our iterative training strategy, together with additional improvements to the network architecture, yields improved results over the state of the art.
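The idea of adding clicks based on the errors of the current prediction can be illustrated with a minimal sketch. Everything specific here is an assumption made for illustration and is not taken from the paper: the helper name `next_click`, the choice of the largest 4-connected error region, and placing the click at the region pixel closest to the region's centroid.

```python
from collections import deque


def next_click(gt, pred):
    """Simulate one corrective click from the current segmentation errors.

    gt and pred are 2D lists of 0/1 labels with the same shape.
    Returns (row, col, is_positive), or None if the prediction is already correct.
    Hypothetical helper: region choice and click placement are assumptions.
    """
    h, w = len(gt), len(gt[0])
    seen = [[False] * w for _ in range(h)]
    best = []
    for r in range(h):
        for c in range(w):
            if seen[r][c] or gt[r][c] == pred[r][c]:
                continue
            # Flood-fill one 4-connected region of pixels sharing the same error type.
            kind = gt[r][c]
            region, queue = [], deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                region.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if (0 <= ny < h and 0 <= nx < w and not seen[ny][nx]
                            and gt[ny][nx] != pred[ny][nx] and gt[ny][nx] == kind):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(region) > len(best):
                best = region
    if not best:
        return None
    # Click on the region pixel nearest to the region's centroid.
    cy = sum(y for y, _ in best) / len(best)
    cx = sum(x for _, x in best) / len(best)
    y, x = min(best, key=lambda p: (p[0] - cy) ** 2 + (p[1] - cx) ** 2)
    # A missed object pixel gets a positive click, a false alarm a negative one.
    return y, x, gt[y][x] == 1
```

In a training loop, such a function would be called after each forward pass, and the returned click would be encoded into the network input for the next pass.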

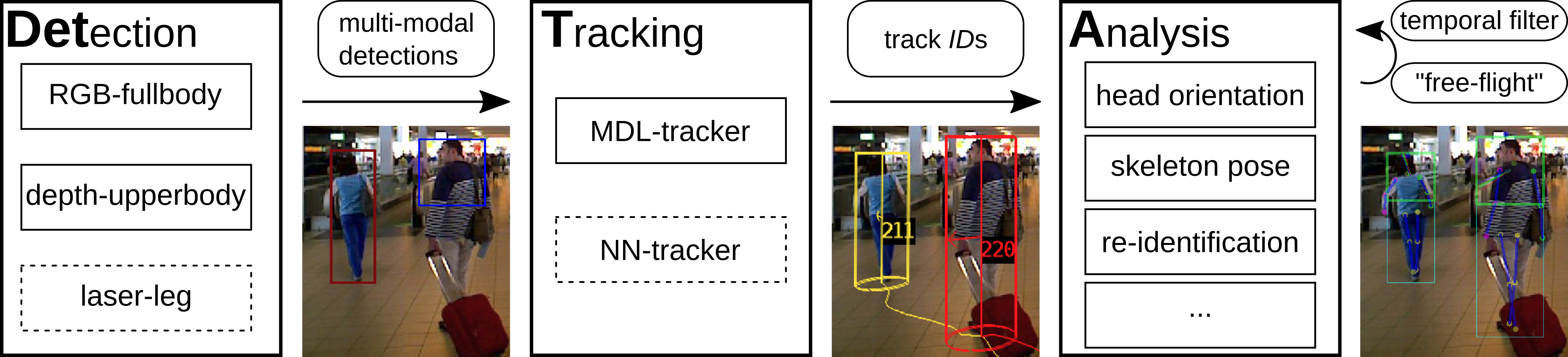

Detection-Tracking for Efficient Person Analysis: The DetTA Pipeline

TL;DR: Detection+Tracking+{head orientation,skeleton} analysis. Per-track smoothing enables filtering outliers as well as a "free-flight" mode, where expensive analysis modules are run with a stride, dramatically reducing runtime at almost no loss of prediction quality.

In the past decade many robots were deployed in the wild, and people detection and tracking is an important component of such deployments. On top of that, one often needs to run modules which analyze persons and extract higher level attributes such as age and gender, or dynamic information like gaze and pose. The latter ones are especially necessary for building a reactive, social robot-person interaction.

In this paper, we combine those components in a fully modular detection-tracking-analysis pipeline, called DetTA. We investigate the benefits of such an integration on the example of head and skeleton pose, by using the consistent track ID for a temporal filtering of the analysis modules’ observations, showing a slight improvement in a challenging real-world scenario. We also study the potential of a so-called “free-flight” mode, where the analysis of a person attribute only relies on the filter’s predictions for certain frames. Here, our study shows that this boosts the runtime dramatically, while the prediction quality remains stable. This insight is especially important for reducing power consumption and sharing precious (GPU-)memory when running many analysis components on a mobile platform, especially so in the era of expensive deep learning methods.
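The "free-flight" idea can be sketched as follows. This is an illustrative sketch under stated assumptions, not the DetTA implementation: the alpha-beta filter, the scalar attribute, the names `AlphaBetaFilter` and `run_track`, and the fixed `stride` schedule are all hypothetical choices.

```python
class AlphaBetaFilter:
    """Simple constant-velocity filter for one scalar attribute of one track.

    Assumption: chosen here only for illustration; any temporal filter
    with predict/update steps would fit the same pattern.
    """

    def __init__(self, alpha=0.5, beta=0.1):
        self.alpha, self.beta = alpha, beta
        self.x = None  # filtered value
        self.v = 0.0   # estimated change per frame

    def predict(self):
        # Advance the state without a new observation (free flight).
        self.x += self.v
        return self.x

    def update(self, z):
        # Fuse a fresh observation z from the analysis module.
        if self.x is None:
            self.x = z
            return self.x
        pred = self.x + self.v
        residual = z - pred
        self.x = pred + self.alpha * residual
        self.v += self.beta * residual
        return self.x


def run_track(frames, analyze, stride=3):
    """Run the expensive `analyze` only every `stride` frames of a track.

    Returns the per-frame outputs and the number of analysis calls made.
    """
    filt = AlphaBetaFilter()
    outputs, calls = [], 0
    for i, frame in enumerate(frames):
        if i % stride == 0:
            outputs.append(filt.update(analyze(frame)))
            calls += 1
        else:
            outputs.append(filt.predict())
    return outputs, calls
```

With `stride=3`, the analysis module runs on only a third of the frames, and the remaining frames are served by the filter's prediction for that track.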

@article{BreuersBeyer2018Arxiv,

title = {{Detection-Tracking for Efficient Person Analysis: The DetTA Pipeline}},

author = {Breuers*, Stefan and Beyer*, Lucas and Rafi, Umer and Leibe, Bastian},

journal = {arXiv preprint arXiv:TBD},

year = {2018}

}

Joint Antenna Selection and Phase-Only Beamforming Using Mixed-Integer Nonlinear Programming

In this paper, we consider the problem of joint antenna selection and analog beamformer design in a downlink single-group multicast network. Our objective is to reduce the hardware requirement by minimizing the number of required phase shifters at the transmitter while fulfilling given quality-of-service constraints. We formulate the problem as an L0 minimization problem and devise a novel branch-and-cut based algorithm to solve the formulated mixed-integer nonlinear program optimally. We also propose a suboptimal heuristic algorithm that solves the same problem with low computational complexity. Furthermore, the performance of the suboptimal method is evaluated against the developed optimal method, which serves as a benchmark.

@inproceedings{FischerHedgeMatterPesaventoPfetschTillmann2018,

author = {T. Fischer and G. Hegde and F. Matter and M. Pesavento and M. E. Pfetsch and A. M. Tillmann},

title = {{Joint Antenna Selection and Phase-Only Beamforming Using Mixed-Integer Nonlinear Programming}},

booktitle = {{Proc. WSA 2018}},

pages = {},

year = {2018},

note = {to appear}

}

Large-Scale Object Discovery and Detector Adaptation from Unlabeled Video

We explore object discovery and detector adaptation based on unlabeled video sequences captured from a mobile platform. We propose a fully automatic approach for object mining from video which builds upon a generic object tracking approach. By applying this method to three large video datasets from autonomous driving and mobile robotics scenarios, we demonstrate its robustness and generality. Based on the object mining results, we propose a novel approach for unsupervised object discovery by appearance-based clustering. We show that this approach successfully discovers interesting objects relevant to driving scenarios. In addition, we perform self-supervised detector adaptation in order to improve detection performance on the KITTI dataset for existing categories. Our approach has direct relevance for enabling large-scale object learning for autonomous driving.

@article{OsepVoigtlaender18arxiv,

title={Large-Scale Object Discovery and Detector Adaptation from Unlabeled Video},

author={Aljo\v{s}a O\v{s}ep and Paul Voigtlaender and Jonathon Luiten and Stefan Breuers and Bastian Leibe},

journal={arXiv preprint arXiv:1712.08832},

year={2018}

}

Previous Year (2017)