Publications

Point-Sampled Shape Representations

An Intuitive Framework for Real-Time Freeform Modeling

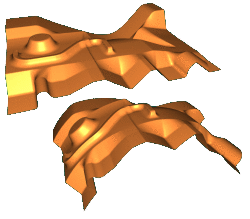

We present a freeform modeling framework for unstructured triangle meshes which is based on constrained shape optimization. The goal is to simplify the user interaction even for quite complex freeform or multiresolution modifications. The user first sets various boundary constraints to define a custom-tailored (abstract) basis function which is adjusted to a given design task. The actual modification is then controlled by moving a single 9-dof manipulator object. The technique can handle arbitrary support regions and piecewise boundary conditions with smoothness ranging continuously from C0 to C2. To adapt the modification more naturally to the shape of the support region, the deformed surface can be tuned to bend with anisotropic stiffness. We are able to achieve real-time response in an interactive design session even for complex meshes by precomputing a set of scalar-valued basis functions that correspond to the degrees of freedom of the manipulator by which the user controls the modification.
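The benefit of precomputed scalar basis functions can be illustrated with a minimal sketch. It assumes (this is not stated in the abstract) that each vertex stores four precomputed weights, one for the manipulator's origin and one for each of its three axes, so that a new vertex position is just a weighted sum of the current frame. The names `deform`, `basis`, and `frame` are hypothetical.

```python
def deform(basis, frame):
    """Evaluate deformed vertex positions from precomputed basis weights.

    basis: list of (w_o, w_x, w_y, w_z) tuples, one per vertex (assumed precomputed).
    frame: (origin, axis_x, axis_y, axis_z), each a 3-tuple, describing the
           current manipulator pose (hypothetical representation).
    """
    origin, ax, ay, az = frame
    positions = []
    for w_o, w_x, w_y, w_z in basis:
        # Per-frame cost is one small weighted sum per vertex; no solve is needed.
        positions.append(tuple(
            w_o * o + w_x * x + w_y * y + w_z * z
            for o, x, y, z in zip(origin, ax, ay, az)
        ))
    return positions


# Moving the manipulator only changes `frame`; `basis` stays fixed.
basis = [(1.0, 2.0, 0.0, 0.0)]
rest = ((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
moved = ((1.0, 1.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
print(deform(basis, rest), deform(basis, moved))
```

Under this assumption, the expensive optimization runs once when the constraints are set, and each manipulator movement costs only a linear pass over the vertices.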

Optimized Sub-Sampling of Point Sets for Surface Splatting

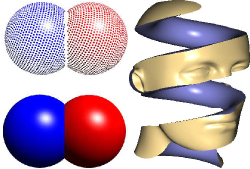

Using surface splats as a rendering primitive has gained increasing attention recently due to its potential for high-performance and high-quality rendering of complex geometric models. However, as with any other rendering primitive, the processing costs are still proportional to the number of primitives that we use to represent a given object. This is why complexity reduction for point-sampled geometry is as important as it is, e.g., for triangle meshes. In this paper we present a new sub-sampling technique for dense point clouds which is specifically adjusted to the particular geometric properties of circular or elliptical surface splats. A global optimization scheme computes an approximately minimal set of splats that covers the entire surface while staying below a globally prescribed maximum error tolerance ε. Since our algorithm converts pure point sample data into surface splats with normal vectors and spatial extent, it can also be considered a surface reconstruction technique which generates a hole-free, piecewise linear, C^{-1} continuous approximation of the input data. Here we can exploit the higher flexibility of surface splats compared to triangle meshes. Compared to previous work in this area, we are able to obtain significantly lower splat numbers for a given error tolerance.

Best student paper award!
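Choosing an approximately minimal set of splats that covers all input points is a set-cover problem. The sketch below uses the plain greedy set-cover heuristic as a stand-in; it is not the paper's global optimization scheme, and it assumes the candidate splats (each already within the error tolerance) and the points they cover have been computed beforehand. The name `greedy_cover` and the input layout are hypothetical.

```python
def greedy_cover(candidates, num_points):
    """Pick splats greedily until every point index is covered.

    candidates: dict mapping a splat id to the set of point indices it covers
                (assumed to be precomputed for the given error tolerance).
    num_points: total number of input points, indexed 0..num_points-1.
    """
    uncovered = set(range(num_points))
    chosen = []
    while uncovered:
        # Take the splat that covers the most still-uncovered points.
        best = max(candidates, key=lambda s: len(candidates[s] & uncovered))
        gain = candidates[best] & uncovered
        if not gain:
            raise ValueError("some points are not covered by any candidate splat")
        chosen.append(best)
        uncovered -= gain
    return chosen


candidates = {"a": {0, 1, 2}, "b": {2, 3}, "c": {3, 4}, "d": {0, 4}}
print(greedy_cover(candidates, 5))
```

A greedy pass like this gives a valid cover quickly; a subsequent global relaxation step could then try to reduce the splat count further.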

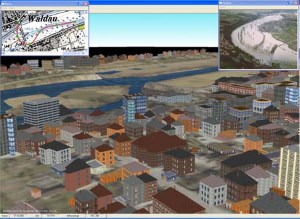

HW3D: A tool for interactive real-time 3D visualization in GIS supported flood modelling

Large-scale numerical simulations are used to predict the damage a possible flood would cause, and their results help to prevent future flood catastrophes. The more realistic the visualization of these results is, the more precautions local authorities and citizens will take. This paper describes a tool and techniques for producing a realistic-looking, three-dimensional, easy-to-use, real-time visualization despite the huge amount of data generated by the flood simulation process.

@inproceedings{bender04,
  author = {Jan Bender and Dieter Finkenzeller and Peter Oel},
  title = {HW3D: A tool for interactive real-time 3D visualization in GIS supported flood modelling},
  booktitle = {Proceedings of the 17th international conference on computer animation and social agents},
  year = {2004},
  address = {Geneva (Switzerland)},
  pages = {305--314}
}

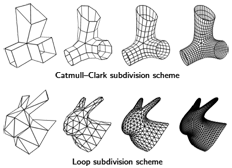

Optimization Techniques for Approximation with Subdivision Surfaces

We present a method for scattered data approximation with subdivision surfaces which uses the true representation of the limit surface as a linear combination of smooth basis functions associated with the control vertices. This is unlike previous techniques, which used only piecewise linear approximations of the limit surface. As a result, we can assign arbitrary parameterizations to the given sample points, including those generated by parameter correction. We present a robust and fast algorithm for exact closest point search on Loop surfaces by combining Newton iteration and non-linear minimization. Based on this, we perform unconditionally convergent parameter correction to optimize the approximation with respect to the L^2 metric, and thus we make a well-established scattered data fitting technique, previously available only for B-spline surfaces, applicable to subdivision surfaces. Furthermore, we exploit the fact that the control mesh of a subdivision surface can have arbitrary connectivity to reduce the L^\infty error down to a user-defined tolerance by adaptively restructuring the control mesh. By employing iterative least squares solvers, we achieve acceptable running times even for large amounts of data, and we obtain high quality approximations by surfaces with relatively low control mesh complexity compared to the number of sample points. Since we are using plain subdivision surfaces, there is no need for multiresolution detail coefficients, and we do not have to deal with the additional overhead in data and computational complexity associated with them.
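Parameter correction boils down to finding, for each sample point, the parameter of its closest point on the fitted shape. The sketch below shows the Newton iteration for that sub-problem on a planar parametric curve rather than a Loop surface, which is a deliberate simplification; the function name `closest_parameter` and the parabola example are assumptions made for illustration.

```python
def closest_parameter(c, dc, ddc, q, t0, iters=50, tol=1e-12):
    """Newton iteration for a stationary point of |c(t) - q|^2.

    c, dc, ddc: callables returning the curve point and its first and second
                derivatives as 2-tuples. q: the sample point. t0: initial guess.
    """
    t = t0
    for _ in range(iters):
        p, d1, d2 = c(t), dc(t), ddc(t)
        diff = (p[0] - q[0], p[1] - q[1])
        # f(t) = (c(t) - q) . c'(t) vanishes at the closest point.
        f = diff[0] * d1[0] + diff[1] * d1[1]
        df = d1[0] ** 2 + d1[1] ** 2 + diff[0] * d2[0] + diff[1] * d2[1]
        if df == 0.0:
            break  # Newton step undefined; a robust solver would fall back here
        step = f / df
        t -= step
        if abs(step) < tol:
            break
    return t


# Parabola c(t) = (t, t^2); closest point to q = (0, 1), starting from t = 1.
t_star = closest_parameter(
    lambda t: (t, t * t), lambda t: (1.0, 2.0 * t), lambda t: (0.0, 2.0),
    q=(0.0, 1.0), t0=1.0,
)
print(t_star)
```

Plain Newton iteration only converges from a good starting value, which is one reason to pair it with a more robust minimization step as the abstract describes.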

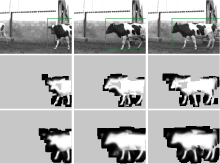

Combined Object Categorization and Segmentation with an Implicit Shape Model

We present a method for object categorization in real-world scenes. Following a common consensus in the field, we do not assume that a figure-ground segmentation is available prior to recognition. However, in contrast to most standard approaches for object class recognition, our approach automatically segments the object as a result of the categorization. This combination of recognition and segmentation into one process is made possible by our use of an Implicit Shape Model, which integrates both into a common probabilistic framework. In addition to the recognition and segmentation result, it also generates a per-pixel confidence measure specifying the area that supports a hypothesis and how much it can be trusted. We use this confidence to derive a natural extension of the approach to handle multiple objects in a scene and resolve ambiguities between overlapping hypotheses with a novel MDL-based criterion. In addition, we present an extensive evaluation of our method on a standard dataset for car detection and compare its performance to existing methods from the literature. Our results show that the proposed method significantly outperforms previously published methods while needing an order of magnitude fewer training examples. Finally, we present results for articulated objects, which show that the proposed method can categorize and segment unfamiliar objects in different articulations and with widely varying texture patterns, even under significant partial occlusion.

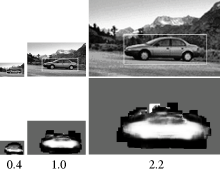

Scale Invariant Object Categorization Using a Scale-Adaptive Mean-Shift Search

The goal of our work is object categorization in real-world scenes. That is, given a novel image we want to recognize and localize previously unseen objects based on their similarity to a learned object category. For use in a real-world system, it is important that this includes the ability to recognize objects at multiple scales. In this paper, we present an approach to multi-scale object categorization using scale-invariant interest points and a scale-adaptive Mean-Shift search. The approach builds on the method from [12], which has been demonstrated to achieve excellent results for the single-scale case, and extends it to multiple scales. We present an experimental comparison of the influence of different interest point operators and quantitatively show the method’s robustness to large scale changes.

Awarded the main prize of the German Pattern Recognition Society (DAGM Best Paper Award)
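The idea of a scale-adaptive Mean-Shift search can be sketched as mode seeking over weighted votes in (x, y, scale) space, where the spatial search window grows with the current scale estimate. The version below uses a uniform kernel and hypothetical parameters (`base_radius`, `scale_band`); it illustrates the general technique and is not the paper's exact formulation.

```python
def mean_shift(votes, start, base_radius=2.0, scale_band=0.5, iters=50, tol=1e-9):
    """Seek a local maximum of vote density in (x, y, scale) space.

    votes: list of (x, y, scale, weight) tuples.
    start: initial (x, y, scale) estimate.
    """
    x, y, s = start
    for _ in range(iters):
        radius = base_radius * s  # the window adapts to the current scale
        inside = [
            (vx, vy, vs, w) for vx, vy, vs, w in votes
            if (vx - x) ** 2 + (vy - y) ** 2 <= radius ** 2
            and abs(vs - s) <= scale_band * s
        ]
        total = sum(w for _, _, _, w in inside)
        if total == 0:
            break  # no support in the window; keep the current estimate
        nx = sum(vx * w for vx, _, _, w in inside) / total
        ny = sum(vy * w for _, vy, _, w in inside) / total
        ns = sum(vs * w for _, _, vs, w in inside) / total
        shift = abs(nx - x) + abs(ny - y) + abs(ns - s)
        x, y, s = nx, ny, ns
        if shift < tol:
            break
    return x, y, s


votes = [(10, 10, 2, 1), (11, 10, 2, 1), (10, 11, 2, 1), (11, 11, 2, 1), (50, 50, 1, 1)]
print(mean_shift(votes, start=(9.0, 9.0, 2.0)))
```

Tying the window size to the scale means a hypothesis for a large object pools votes from a proportionally larger image region.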

Interleaved Object Categorization and Segmentation

This thesis is concerned with the problem of visual object categorization, that is, of recognizing previously unseen objects, localizing them in cluttered real-world images, and assigning the correct category label. This capability is one of the core competencies of the human visual system. Yet, computer vision systems are still far from reaching a comparable level of performance. Moreover, computer vision research has in the past mainly focused on the simpler and more specific problem of identifying known objects under novel viewing conditions. The visual categorization problem is closely linked to the task of figure-ground segmentation, that is, of dividing the image into an object and a non-object part. Historically, figure-ground segmentation has often been seen as an important and even necessary preprocessing step for object recognition. However, purely bottom-up approaches have so far been unable to yield segmentations of sufficient quality, so that most current recognition approaches have been designed to work independently from segmentation. In contrast, this thesis considers object categorization and figure-ground segmentation as two interleaved processes that closely collaborate towards a common goal. The core part of our work is a probabilistic formulation which integrates both capabilities into a common framework. As shown in our experiments, the tight coupling between those two processes allows them to profit from each other and improve their individual performances. The resulting approach can detect categorical objects in novel images and automatically compute a segmentation for them. This segmentation is then used to again improve recognition by allowing the system to focus its effort on object pixels and discard misleading influences from the background. In addition to improving the recognition performance for individual hypotheses, the top-down segmentation also allows us to determine exactly where a hypothesis draws its support from. We use this information to design a hypothesis verification stage based on the MDL principle that resolves ambiguities between overlapping hypotheses on a per-pixel level and factors out the effects of partial occlusion. Altogether, this procedure constitutes a novel mechanism in object detection that allows scenes containing multiple objects to be analyzed in a principled manner. Our results show that it presents an improvement over conventional criteria based on bounding box overlap and permits more accurate acceptance decisions. Our approach is based on a highly flexible implicit representation for object shape that can combine the information of local parts observed on different training examples and interpolate between the corresponding objects. As a result, the proposed method can learn object models from only a few training examples and achieve competitive object detection performance with training sets that are between one and two orders of magnitude smaller than those used in comparable systems. An extensive evaluation on several large data sets shows that the system is applicable to many different object categories, including both rigid and articulated objects.

Teaching meshes, subdivision and multiresolution techniques

In recent years, geometry processing algorithms that directly operate on polygonal meshes have become an indispensable tool in computer graphics, CAD/CAM applications, numerical simulations, and medical imaging. Because the demand for people who are specialized in these techniques increases steadily, the topic is finding its way into the standard curricula of related lectures on computer graphics and geometric modeling and is often the subject of seminars and presentations. In this article we suggest a toolbox to educators who are planning to set up a lecture or talk about geometry processing for a specific audience. For this we propose a set of teaching blocks, each of which covers a specific subtopic. These teaching blocks can be assembled so as to fit different occasions like lectures, courses, seminars and talks and different audiences like students and industrial practitioners. We also provide examples that can be used to deepen the subject matter and give references to the most relevant work.

Direct Anisotropic Quad-Dominant Remeshing

We present an extension of the anisotropic polygonal remeshing technique developed by Alliez et al. Our algorithm does not rely on a global parameterization of the mesh and is therefore applicable to surfaces of arbitrary genus. We show how to exploit the structure of the original mesh in order to efficiently perform the proximity queries required in the line integration phase, thus dramatically improving the scalability and the performance of the original algorithm. Finally, we propose a novel technique for producing conforming quad-dominant meshes in isotropic regions as well by propagating directional information from the anisotropic regions.

A Remeshing Approach to Multiresolution Modeling

Providing a thorough mathematical foundation, multiresolution modeling is the standard approach for global surface deformations that preserve fine surface details in an intuitive and plausible manner. A given shape is decomposed into a smooth low-frequency base surface and high-frequency detail information. Adding these details back onto a deformed version of the base surface results in the desired modification. Using a suitable detail encoding, the connectivity of the base surface is not restricted to be the same as that of the original surface. We propose to exploit this degree of freedom to improve both robustness and efficiency of multiresolution shape editing. In several approaches the modified base surface is computed by solving a linear system of discretized Laplacians. By remeshing the base surface such that the Voronoi areas of its vertices are equalized, we turn the unsymmetric surface-related linear system into a symmetric one, such that simpler, more robust, and more efficient solvers can be applied. The high regularity of the remeshed base surface further removes numerical problems caused by mesh degeneracies and results in a better discretization of the Laplacian operator. The remeshing is performed on the low-frequency base surface only, while the connectivity of the original surface is kept fixed. Hence, this functionality can be encapsulated inside a multiresolution kernel and is thus completely hidden from the user.
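Why equal Voronoi areas yield a symmetric system can be seen from a short derivation. It assumes the common cotangent discretization of the Laplace-Beltrami operator, which the abstract does not name explicitly; $A_i$ denotes the Voronoi area of vertex $p_i$, and $\alpha_{ij}$, $\beta_{ij}$ are the two angles opposite the edge $(i,j)$.

```latex
\begin{align*}
\Delta_S\, p_i &= \frac{1}{2A_i} \sum_{j \in N(i)}
   \left(\cot\alpha_{ij} + \cot\beta_{ij}\right)\,(p_j - p_i)
   && \text{(per-vertex Laplacian)} \\
L &= M^{-1} L_s, \qquad M = \mathrm{diag}(A_1, \dots, A_n), \qquad L_s = L_s^{\top}
   && \text{(matrix form)} \\
A_1 = \dots = A_n = A \;&\Longrightarrow\; L = \tfrac{1}{A}\, L_s = L^{\top}
   && \text{(equalized areas)}
\end{align*}
```

The cotangent weights are symmetric in $i$ and $j$, so the only source of asymmetry is the row scaling by $1/A_i$; once all areas are equal, that scaling becomes a constant factor.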

Phong Splatting

Surface splatting has developed into a valuable alternative to triangle meshes when it comes to rendering of highly detailed massive datasets. However, even highly accurate splat approximations of the given geometry may sometimes not provide a sufficient rendering quality since surface lighting mostly depends on normal vectors whose deviation is not bounded by the Hausdorff approximation error. Moreover, current point-based rendering systems usually associate a constant normal vector with each splat, leading to rendering results which are comparable to flat or Gouraud shading for polygon meshes. In contrast, we propose to base the lighting of a splat on a linearly varying normal field associated with it, and we show that the resulting Phong Splats provide a visual quality which is far superior to existing approaches. We present a simple and effective way to construct a Phong splat representation for a given set of input samples. Our surface splatting system is implemented completely based on vertex and pixel shaders of current GPUs and achieves a splat rate of up to 4M Phong shaded, filtered, and blended splats per second. In contrast to previous work, our scan conversion is projectively correct per pixel, leading to more accurate visualization and clipping at sharp features.
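A linearly varying normal field over a splat can be sketched as follows. The sketch assumes each splat stores a center normal plus two normal increments along its local axes, evaluated at local coordinates (u, v) and renormalized before lighting; the names `splat_normal` and `diffuse` are hypothetical, and a real implementation would run this per pixel in a shader.

```python
import math


def normalize(v):
    """Return v scaled to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def splat_normal(n_center, n_u, n_v, u, v):
    """Evaluate the linear normal field n_center + u*n_u + v*n_v, then normalize."""
    raw = tuple(c + u * a + v * b for c, a, b in zip(n_center, n_u, n_v))
    return normalize(raw)


def diffuse(normal, light_dir):
    """Lambertian term for a unit normal and an arbitrary light direction."""
    light = normalize(light_dir)
    return max(0.0, sum(a * b for a, b in zip(normal, light)))


# Lighting now varies across one splat instead of being constant.
center = splat_normal((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 0.0, 0.0)
edge = splat_normal((0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), 1.0, 0.0)
print(diffuse(center, (0.0, 0.0, 1.0)), diffuse(edge, (0.0, 0.0, 1.0)))
```

With a constant normal per splat, both evaluations would give the same intensity; the linear field is what produces smooth shading across the splat.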

Perspective Accurate Splatting

We present a novel algorithm for accurate, high quality point rendering, which is based on the formulation of the splatting process using homogeneous coordinates. In contrast to previous methods, this leads to perspective correct splat shapes, avoiding artifacts such as holes due to the approximation of the perspective projection. Further, our algorithm implements the EWA resampling filter, hence providing high image quality with anisotropic texture filtering. We also present an extension of our rendering primitive that allows the display of sharp edges and corners. Finally, we describe an efficient implementation of the algorithm based on vertex and fragment programs of current GPUs.

Survey of Point-Based Techniques in Computer Graphics

In recent years point-based geometry has gained increasing attention as an alternative surface representation, both for efficient rendering and for flexible geometry processing of highly complex 3D models. Point-sampled objects need neither store nor maintain globally consistent topological information. Therefore they are more flexible than triangle meshes when it comes to handling highly complex or dynamically changing shapes. In this paper, we attempt to give an overview of the various point-based methods that have been proposed in recent years. In particular, we review and evaluate different shape representations, geometric algorithms, and rendering methods which use points as a universal graphics primitive.

Parameterization-free active contour models

We present a novel approach for representing and evolving deformable active contours by restricting the movement of the contour vertices to the grid-lines of a uniform lattice. This restriction implicitly controls the (re-)parameterization of the contour and hence makes it possible to employ parameterization-independent evolution rules. Moreover, the underlying uniform grid makes self-collision detection very efficient. Our contour model is also able to perform topology changes but, more importantly, it can detect and handle self-collisions at sub-pixel precision. In applications where topology changes are not appropriate, we generate contours that touch themselves without any gaps or self-intersections.

Subdivision Scheme Tuning Around Extraordinary Vertices

In this paper we extend the standard method to derive and optimize subdivision rules in the vicinity of extraordinary vertices (EV). Starting from a given set of rules for regular control meshes, we tune the extraordinary rules (ER) such that the necessary conditions for C1 continuity are satisfied along with as many necessary C2 conditions as possible. As is usually done, our approach sets up the general configuration around an EV by exploiting rotational symmetry and reformulating the subdivision rules in terms of the subdivision matrix's eigencomponents. The degrees of freedom are then successively eliminated by imposing new constraints, which allows us, e.g., to improve the curvature behavior around EVs. The method is flexible enough to simultaneously optimize several subdivision rules, i.e., not only the one for the EV itself but also the rules for its direct neighbors. Moreover, it allows us to prescribe the stencils for the ERs and naturally blends them with the regular rules that are applied away from the EV. All the constraints are combined in an optimization scheme that searches the space of feasible subdivision schemes for a candidate which satisfies some necessary conditions exactly and other conditions approximately. The relative weighting of the constraints allows us to tune the properties of the subdivision scheme according to application-specific requirements. We demonstrate our method by tuning the ERs for the well-known Loop scheme and by deriving ERs for a \sqrt{3}-type scheme based on a 6-direction Box-spline.
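To make the role of the subdivision matrix's eigencomponents concrete, the sketch below builds the 1-ring subdivision matrix of the standard Loop scheme (with Loop's original weights, not the tuned rules from this paper) around a vertex of valence n and checks that the rotationally symmetric vector of cosines is an eigenvector with the subdominant eigenvalue 3/8 + 1/4 cos(2π/n). The function names are hypothetical.

```python
import math


def loop_beta(n):
    """Loop's original vertex weight for valence n."""
    lam = 3.0 / 8.0 + 0.25 * math.cos(2.0 * math.pi / n)
    return (5.0 / 8.0 - lam * lam) / n


def loop_matrix(n):
    """1-ring subdivision matrix: row 0 is the center, rows 1..n the ring."""
    beta = loop_beta(n)
    S = [[0.0] * (n + 1) for _ in range(n + 1)]
    S[0][0] = 1.0 - n * beta
    for j in range(1, n + 1):
        S[0][j] = beta
    for i in range(n):
        row = S[i + 1]
        row[0] = 3.0 / 8.0                   # center vertex
        row[1 + i] += 3.0 / 8.0              # ring vertex on the same edge
        row[1 + (i - 1) % n] += 1.0 / 8.0    # previous ring neighbor
        row[1 + (i + 1) % n] += 1.0 / 8.0    # next ring neighbor
    return S


def subdominant_check(n):
    """Return (lambda, residual) for the cosine eigenvector of the ring."""
    lam = 3.0 / 8.0 + 0.25 * math.cos(2.0 * math.pi / n)
    v = [0.0] + [math.cos(2.0 * math.pi * i / n) for i in range(n)]
    Sv = [sum(a * b for a, b in zip(row, v)) for row in loop_matrix(n)]
    residual = max(abs(a - lam * b) for a, b in zip(Sv, v))
    return lam, residual


for valence in (3, 5, 6, 8):
    print(valence, subdominant_check(valence))
```

For the regular valence 6 the subdominant eigenvalue is 1/2; tuning the extraordinary rules amounts to adjusting such eigenvalues and eigenvectors for other valences.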

View-Dependent Streaming of Progressive Meshes

Multiresolution geometry streaming has been well studied in recent years. The client can progressively visualize a triangle mesh from the coarsest resolution to the finest one while a server successively transmits detail information. However, the streaming order of the detail data usually depends only on the geometric importance, since the streaming essentially replays a mesh simplification process backwards. Consequently, the resolution of the model changes globally during streaming even if the client does not want to download detail information for the parts that are invisible from a given view point. In this paper, we introduce a novel framework for view-dependent streaming of multiresolution meshes. The transmission order of the detail data can be adjusted dynamically according to the visual importance with respect to the client's current view point. By adapting the truly selective refinement scheme for progressive meshes, our framework provides efficient view-dependent streaming that minimizes memory cost and network communication overhead. Furthermore, we reduce the per-client session data on the server side by using a special data structure for encoding which vertices have already been transmitted to each client. Experimental results indicate that our framework is efficient enough for a broadcast scenario where one server streams geometry data to multiple clients with different view points.
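Reordering detail data by visual importance can be sketched with a priority queue. The importance measure used here (geometric error divided by distance, restricted to a view cone) is an assumption chosen for illustration, as are the names `stream_order` and `cone_cos`; the sketch also ignores the dependencies between vertex splits that a real progressive mesh must respect.

```python
import heapq
import math


def stream_order(splits, eye, view_dir, cone_cos=0.5):
    """Order refinement records by a simple view-dependent importance.

    splits:   list of (split_id, position, geometric_error) records.
    eye:      viewer position. view_dir: unit viewing direction.
    cone_cos: cosine of the half-angle of the (hypothetical) view cone.
    """
    heap = []
    for split_id, pos, error in splits:
        to_vertex = tuple(p - e for p, e in zip(pos, eye))
        dist = math.sqrt(sum(c * c for c in to_vertex))
        if dist == 0.0:
            continue
        cos_angle = sum(a * b for a, b in zip(to_vertex, view_dir)) / dist
        if cos_angle < cone_cos:
            continue  # outside the view cone: defer this detail
        # heapq is a min-heap, so negate the importance.
        heapq.heappush(heap, (-error / dist, split_id))
    return [heapq.heappop(heap)[1] for _ in range(len(heap))]


splits = [
    ("near", (0.0, 0.0, 1.0), 1.0),
    ("far", (0.0, 0.0, 10.0), 2.0),
    ("behind", (0.0, 0.0, -1.0), 5.0),
    ("big", (0.0, 0.0, 2.0), 4.0),
]
print(stream_order(splits, eye=(0.0, 0.0, 0.0), view_dir=(0.0, 0.0, 1.0)))
```

When the client's view point changes, the server would recompute this order for the records not yet transmitted.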

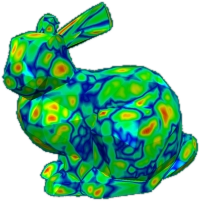

GPU-based Tolerance Volumes for Mesh Processing

In an increasing number of applications triangle meshes represent a flexible and efficient alternative to traditional NURBS-based surface representations. Especially in engineering applications it is crucial to guarantee that a prescribed approximation tolerance to a given reference geometry is respected for any combination of geometric algorithms that are applied when processing a triangle mesh. We propose a simple and generic method for computing the distance of a given polygonal mesh to the reference surface, based on a linear approximation of its signed distance field. Exploiting the hardware acceleration of modern GPUs allows us to perform up to 3M triangle checks per second, enabling real-time distance evaluations even for complex geometries. An additional feature of our approach is the accurate high-quality distance visualization of dynamically changing meshes at a rate of 15M triangles per second. Due to its generality, the presented approach can be used to enhance any mesh processing method by global error control, guaranteeing the resulting mesh to stay within a prescribed error tolerance. The application examples that we present include mesh decimation, mesh smoothing and freeform mesh deformation.

Topologically Correct Extraction of the Cortical Surface of a Brain Using Level-Set Methods

In this paper we present a level-set framework for accurate and efficient extraction of the surface of a brain from MRI data. To prevent the so-called partial volume effect we use a topology preserving model that ensures the correct topology of the surface at all times during the reconstruction process. We also describe improvements that enhance its stability, accuracy and efficiency. The resulting reconstruction can then be used in downstream applications where we in particular focus on the problem of accurately measuring geodesic distances on the surface.
